Breaking Down Barriers: How AI Is Revolutionising Assistive Technology Across Six Critical Domains

Dinis Guarda, Author

Fri Nov 14 2025

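AI assistive tech is here now: 43M vision assistance requests in 2024, new hearing solutions for the 1.5B people living with hearing loss, and 76% of neurodivergent employees reporting better work performance with AI. See the revolution in action across six domains.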

Continued from Part 1: "The Untapped Potential: How AI-Powered Assistive Technology Can Transform 2.5 Billion Lives"

Based on research from "AI Inclusivity, Neurodiversity and Disabilities: A Comprehensive White Paper on Artificial Intelligence as a Transformative Force" by Dinis Guarda

From Promise to Practice: AI in Action

In Part 1, we explored the staggering global need for assistive technology: 2.5 billion people today, growing to 3.5 billion by 2050. We examined the economic imperative and the untapped potential of neurodivergent talent. But numbers tell only half the story.

The real transformation happens when AI-powered technologies meet human need at the intersection of innovation and compassion. This is where theory becomes practice, where code becomes capability, and where barriers that have existed for generations begin to crumble.

Welcome to the frontlines of the AI assistive technology revolution.

1. Vision Support: AI Eyes That Truly See

The Challenge

Globally, 253 million people experience vision impairment. For decades, assistive tools offered basic functionality: magnification, high contrast, and audio descriptions. But they couldn't answer the fundamental question: What am I looking at, and what does it mean?

The AI Breakthrough

Microsoft's Seeing AI, first unveiled in 2016, transformed smartphones into intelligent visual companions. Now available in 36 languages, the app goes beyond simple object recognition to provide contextual understanding: reading text aloud, identifying currency, recognising faces and emotions, and describing entire scenes with remarkable nuance.

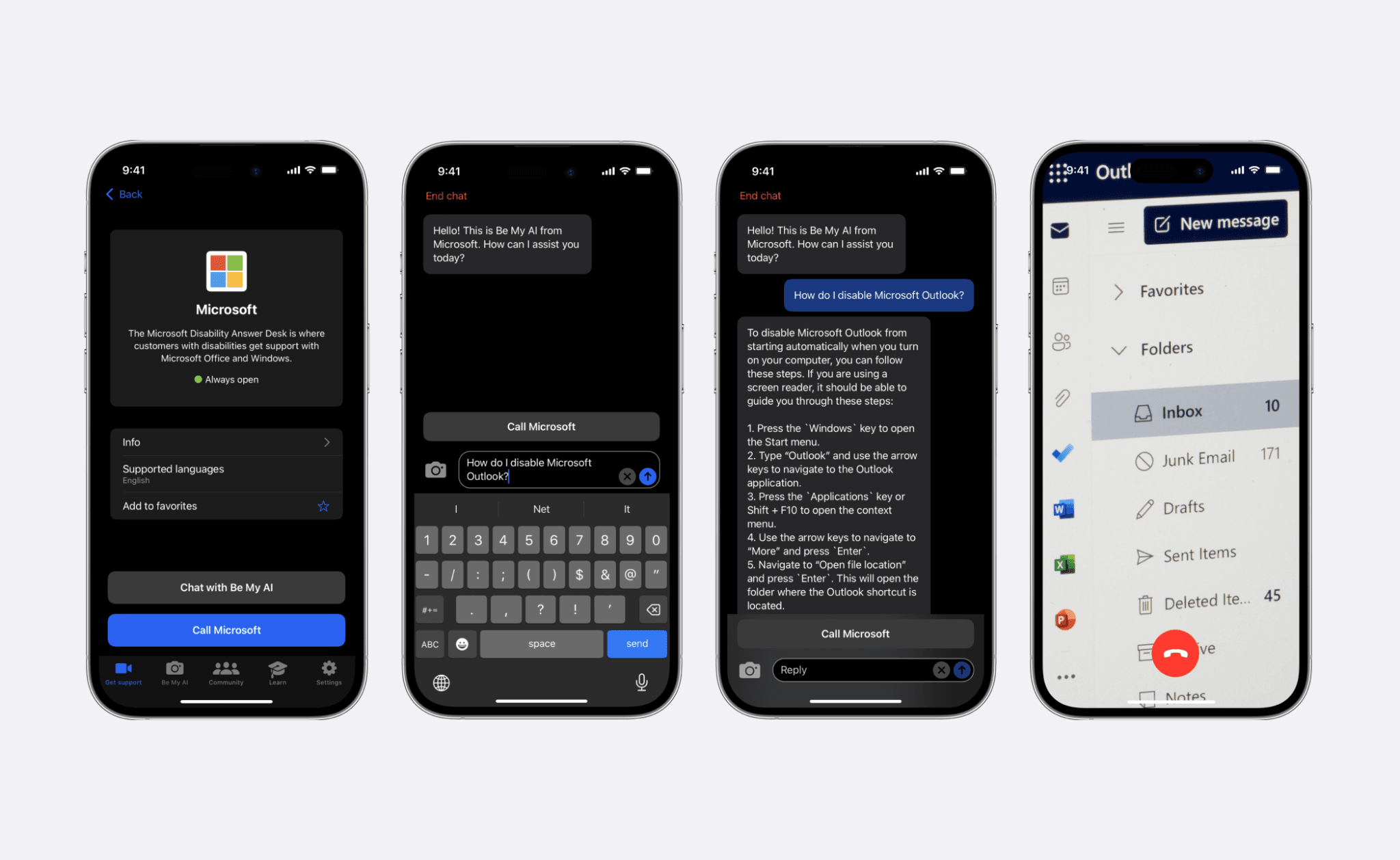

But the true game-changer emerged from an unlikely source: a Danish startup called Be My Eyes.

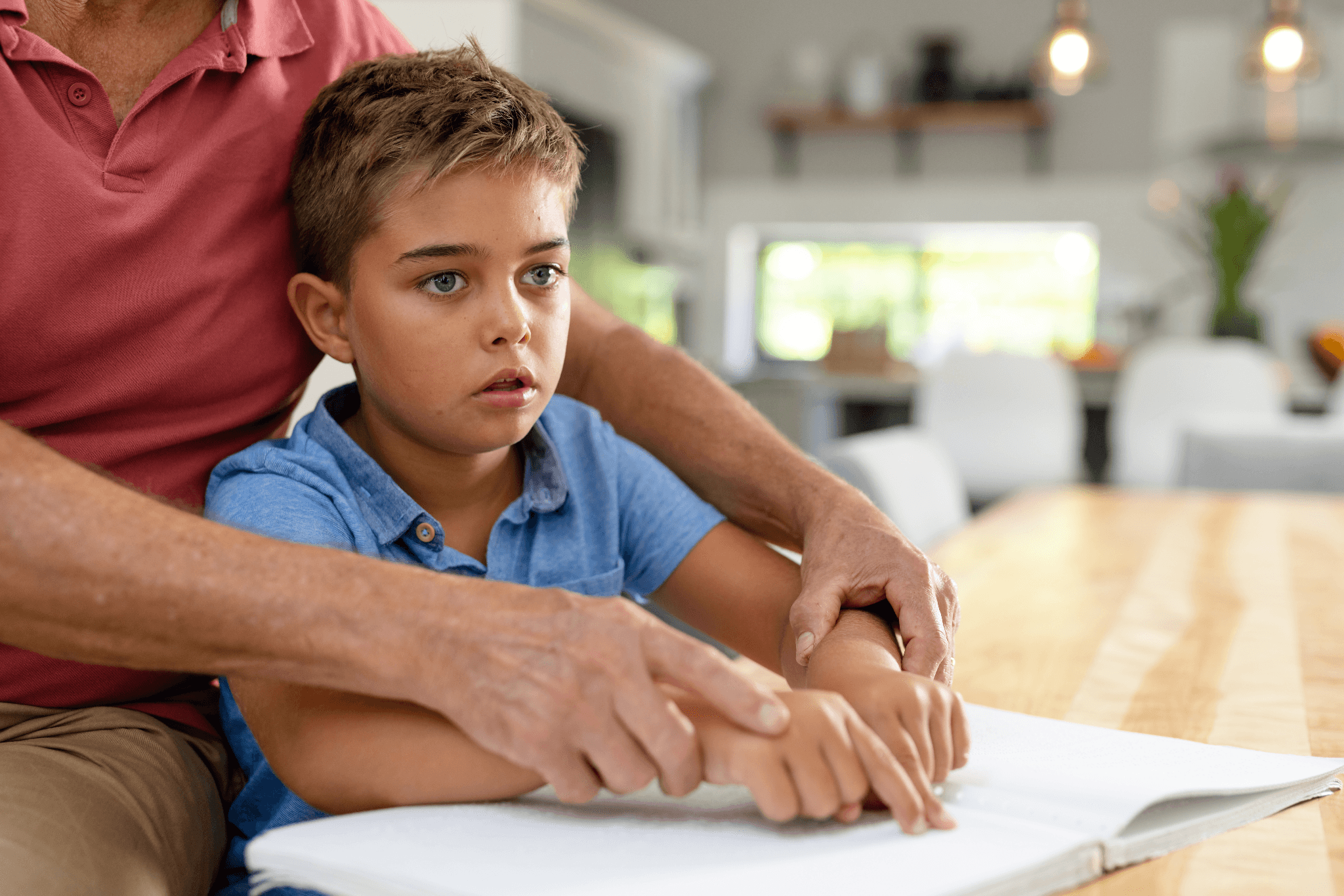

The Human-AI Fusion: Be My Eyes

Founded in 2015, Be My Eyes created something extraordinary: a global network connecting 750,000 blind or low-vision users with 8.3 million volunteers across 150 countries, speaking 180 languages. Volunteers provide real-time visual assistance through smartphone video calls, embodying the best of human compassion.

Then, in 2023, Be My Eyes integrated OpenAI's GPT-4 to create Be My AI, an AI-powered virtual volunteer available 24/7. The results were nothing short of revolutionary.

Real-world impact:

- Users photograph refrigerator contents and receive not just item identification, but recipe suggestions and step-by-step cooking instructions

- The AI navigates complex environments like railway systems, providing specific route guidance with safety considerations

- 43 million user requests processed in 2024, with threefold year-over-year growth in monthly AI sessions

- $6.1 million Series A+ funding in 2025, led by Enable Ventures

This isn't about replacing human volunteers; it's about instant access to assistance that scales on demand while maintaining the warmth and context that make help genuinely helpful.

2. Hearing and Communication: Amplifying Silent Voices

The Staggering Gap

1.5 billion people globally experience hearing loss, yet hearing aid production meets less than 10% of global demand. The economic cost of unaddressed hearing loss? A staggering $980 billion annually.

The AI Response

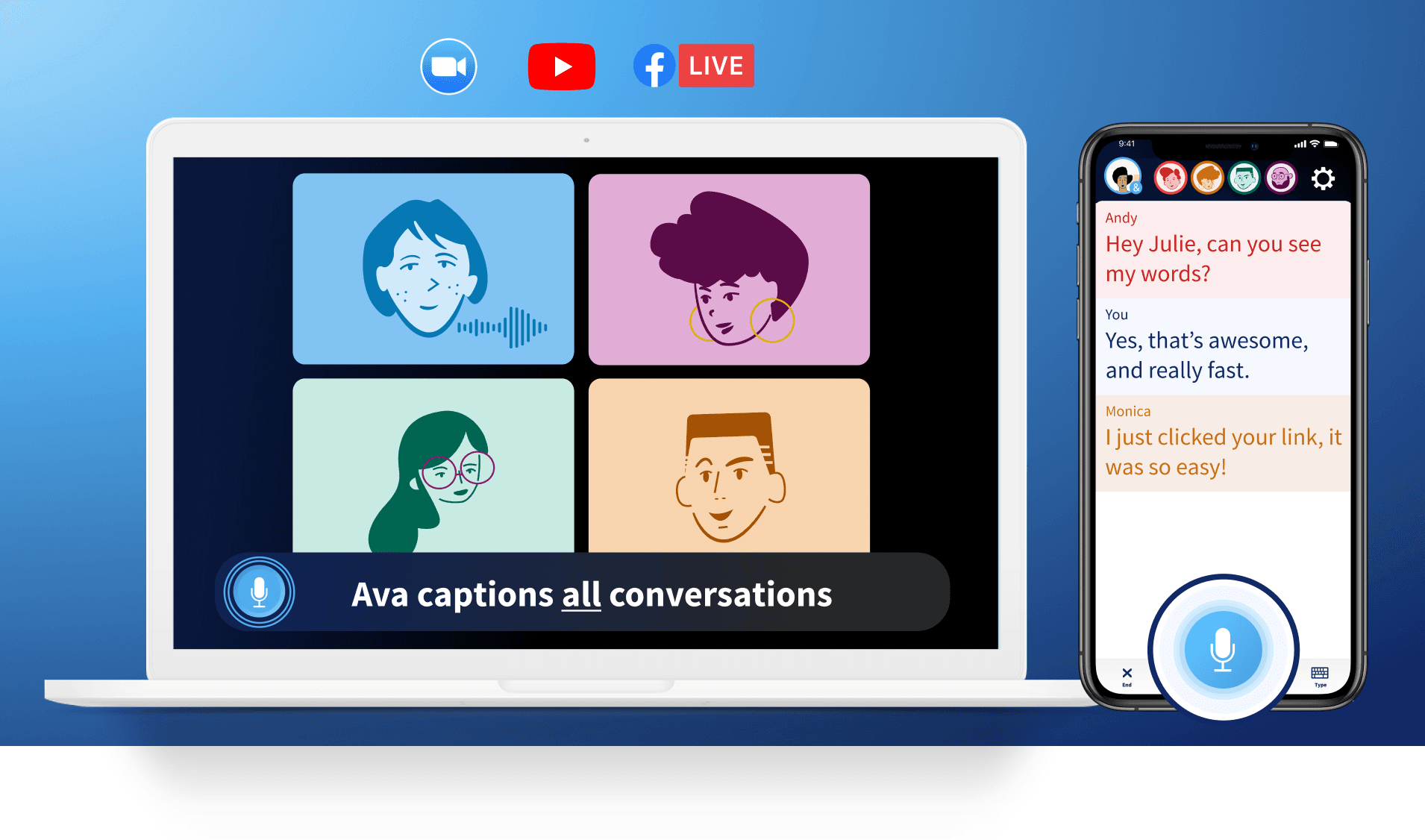

Real-time transcription and captioning services are democratising communication access at unprecedented scale:

Live Transcribe and Ava convert spoken words into text instantly, enabling participation in conversations, meetings, and public spaces that were previously inaccessible.

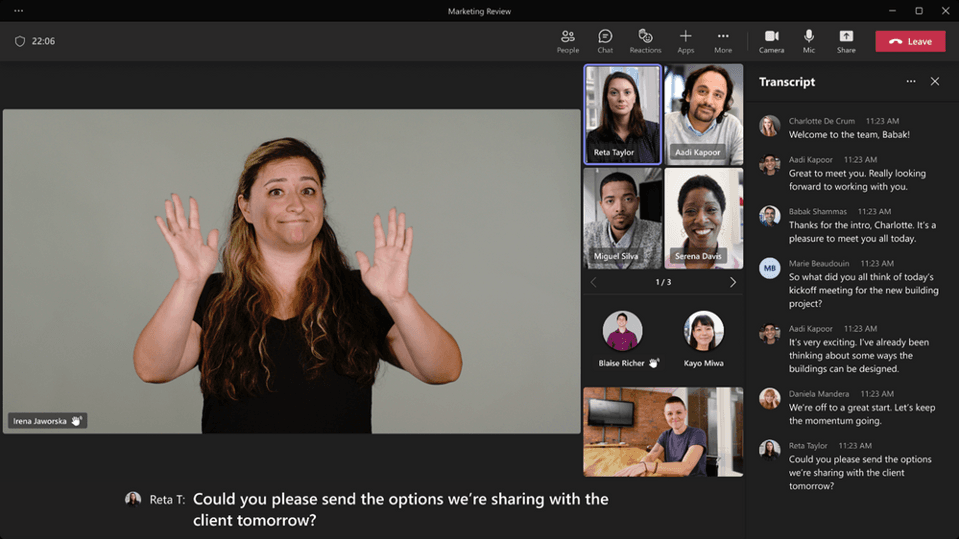

Microsoft Teams introduced features that identify when someone uses sign language, prominently featuring them as speakers in meetings, a simple but profound acknowledgment of communication diversity.

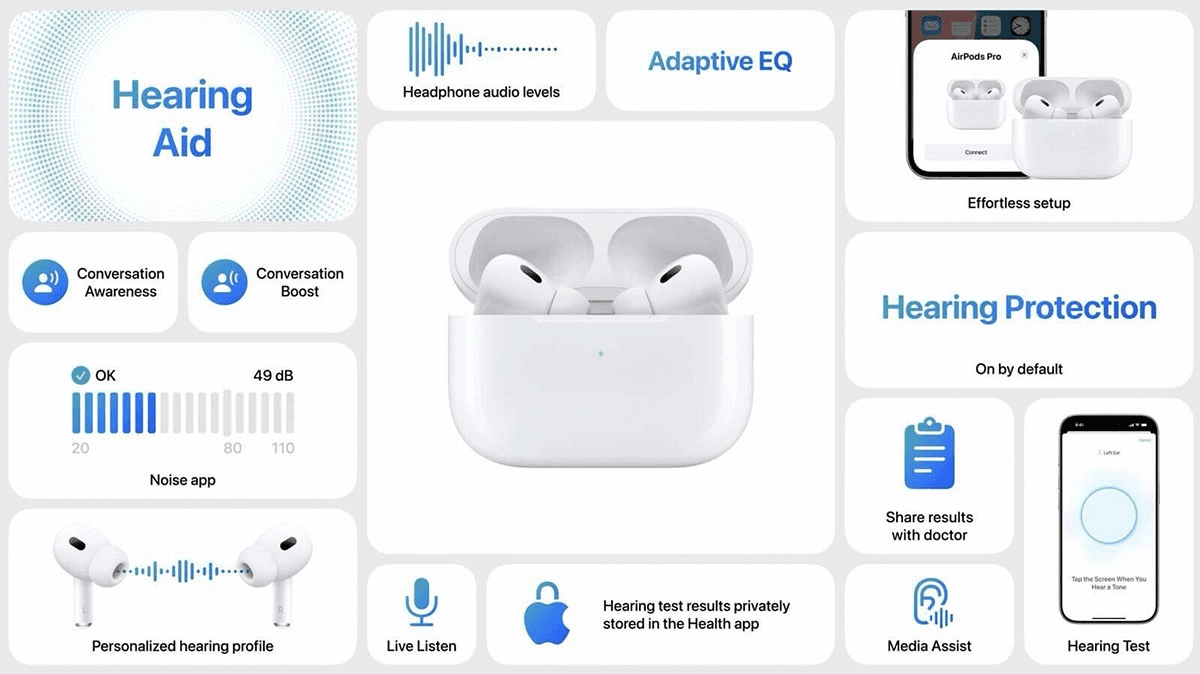

Apple's AirPods Pro 2 can now function as clinical-grade hearing aids for mild to moderate hearing loss, thanks to a hearing aid feature announced in September 2024. The move targets a $13 billion market opportunity and turns consumer electronics into medical devices.

Beyond Hearing: Giving Voice to the Voiceless

For individuals with speech impairments, AI-powered Augmentative and Alternative Communication (AAC) devices are revolutionary:

Voiceitt uses AI to recognise and adapt to non-standard or atypical speech patterns, acting as a real-time translator between the user and the world.

Voice cloning technology enables users who have lost their voice to ALS, throat cancer, or other conditions to communicate using synthetic versions of their original voice, preserving identity and personality in communication.

This isn't just about being heard; it's about being yourself when you speak.

3. Mobility and Navigation: Intelligence in Motion

The Access Crisis

Of the 80 million people who need wheelchairs globally, only 5-35% have access to one, depending on their country of residence. But having a wheelchair is just the beginning; navigation, independence, and autonomy present continuing challenges.

AI-Powered Movement

Google Maps and BlindSquare provide detailed, audio-based directions with information on accessible routes, obstacles, and points of interest, transforming urban environments from obstacle courses into navigable spaces.

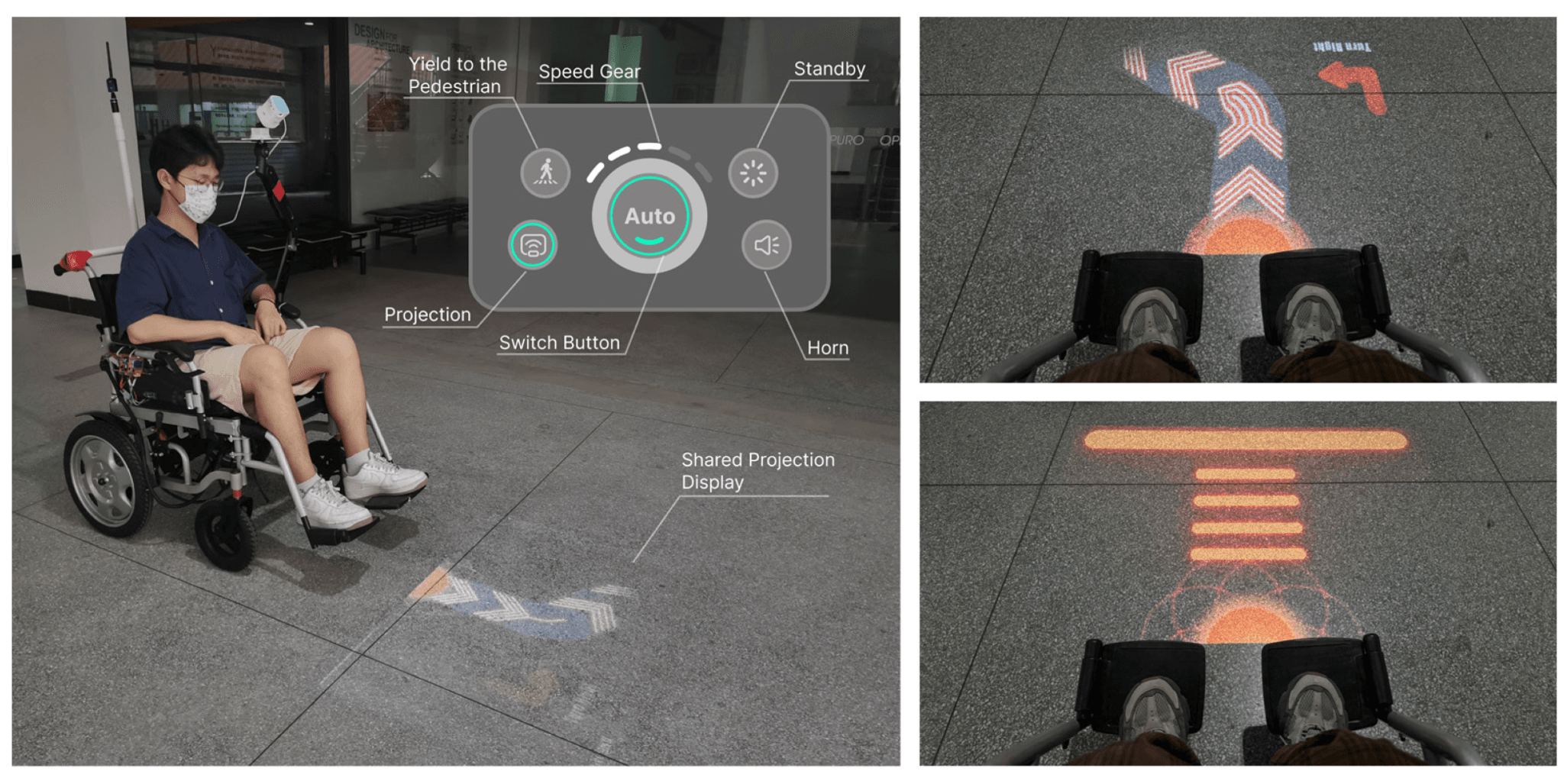

Intelligent wheelchairs integrate AI algorithms for more natural movement and autonomous navigation, learning user preferences and anticipating needs.

The Keeogo walking assistance device uses AI to learn individual user movements and provide real-time support, enhancing physical mobility through adaptive intelligence.

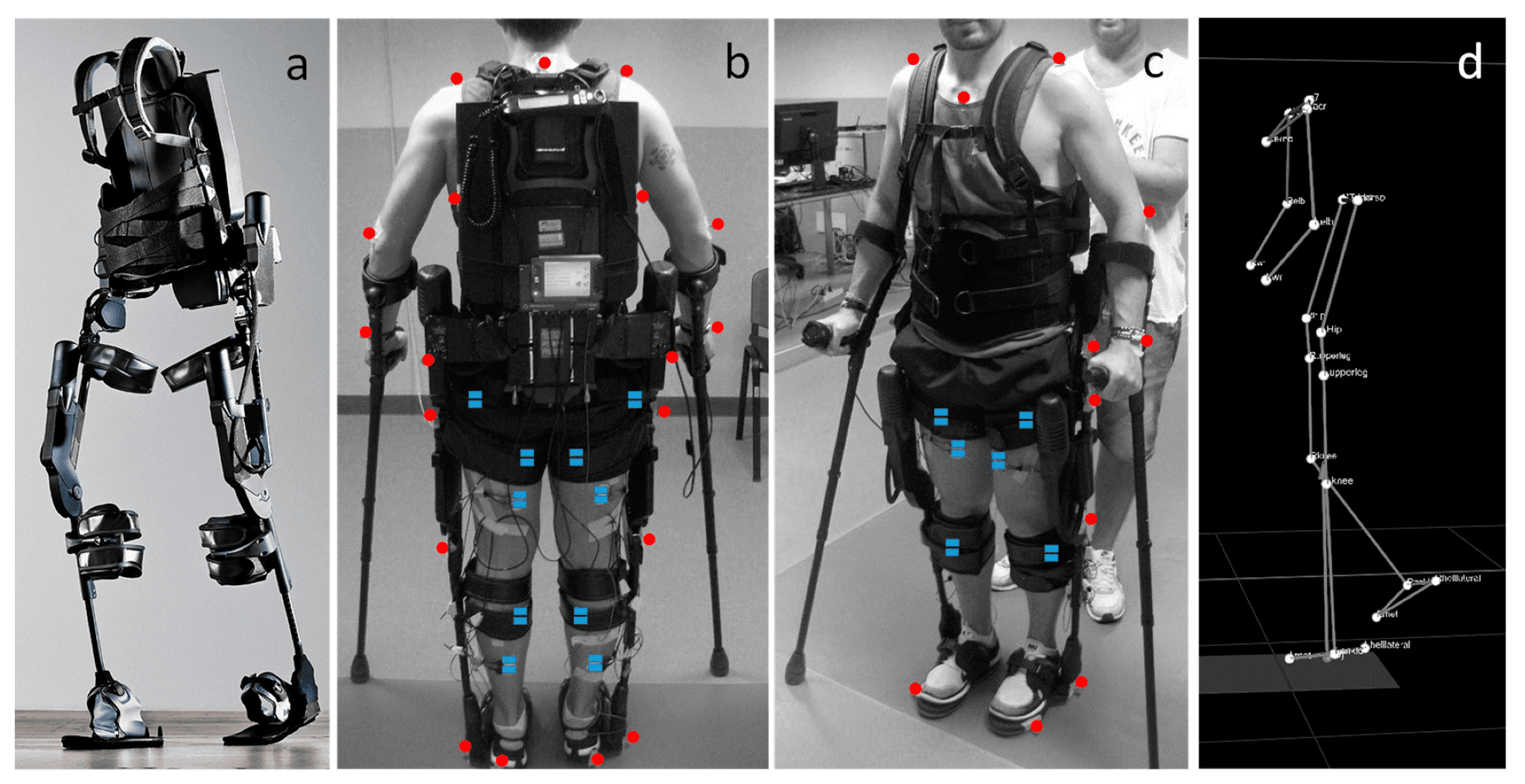

Advanced exoskeletons and robotic assistance devices introduced in 2024 are pushing the boundaries of what's possible, enabling individuals with severe mobility impairments to stand, walk, and perform daily tasks with unprecedented independence.

Smart Homes as Accessibility Platforms

Voice-controlled management of lighting, thermostats, door locks, and appliances through AI-powered smart home systems removes physical barriers within the home environment, arguably the space where independence matters most.

4. Cognitive and Learning Support: Extending the Mind

The Executive Function Challenge

For neurodivergent individuals and those with cognitive impairments, executive functioning (planning, organising, task management, and time awareness) presents daily obstacles that can make seemingly simple activities overwhelming.

AI as Cognitive Scaffold

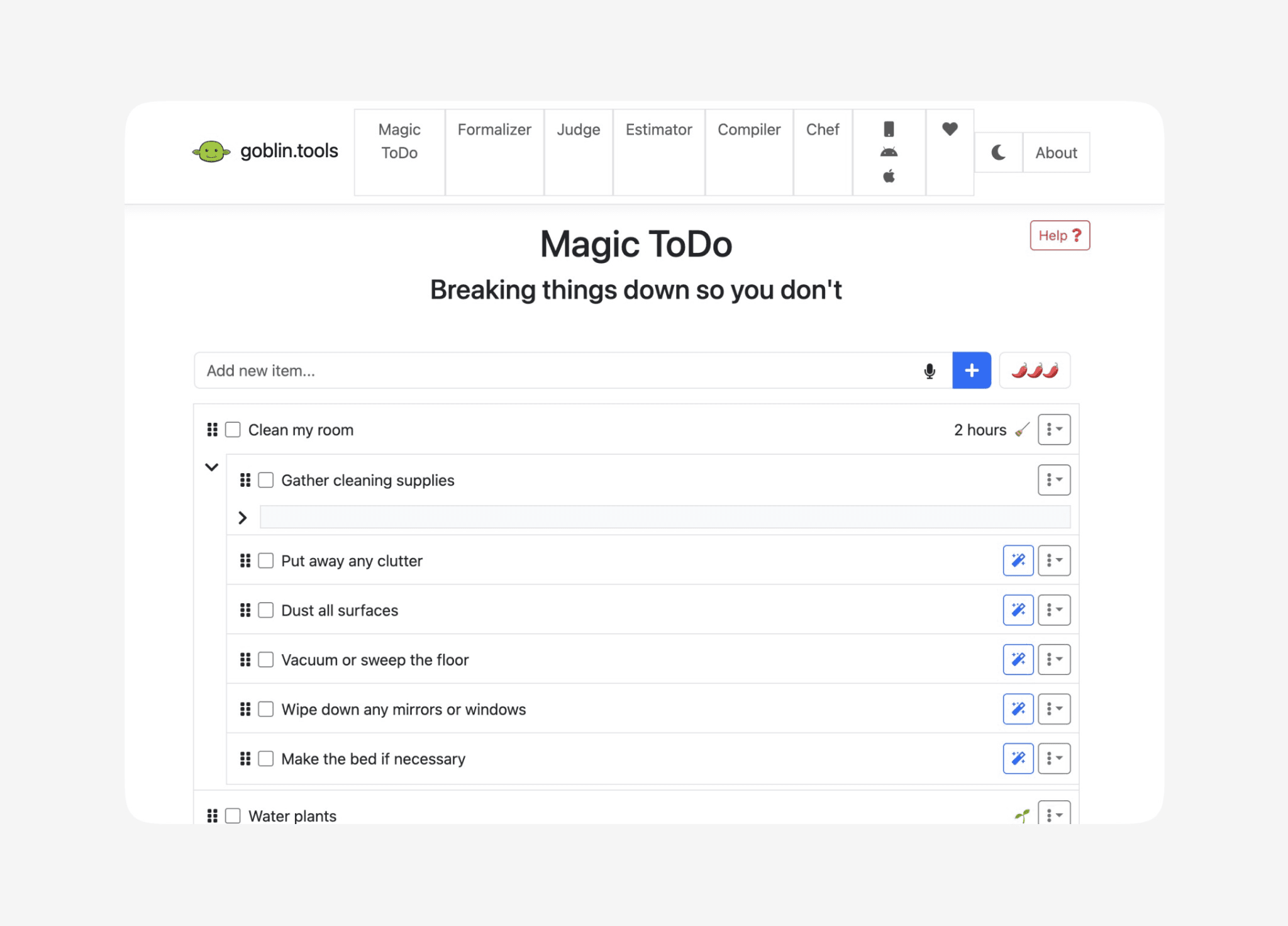

Goblin Tools and Vanderbilt University's Planning Assistant use AI to break down complex tasks into manageable steps, create schedules, and set intelligent reminders that adapt to user patterns.

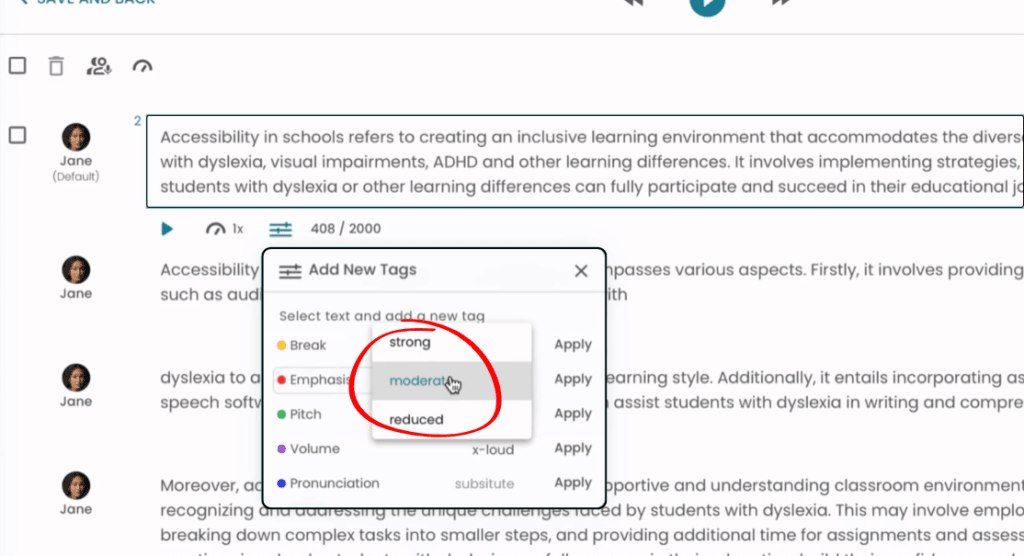

Microsoft's Immersive Reader and NaturalReader adjust font size, read text aloud, summarise documents, and check readability and grammar, supporting individuals with dyslexia, visual impairments, or processing differences.

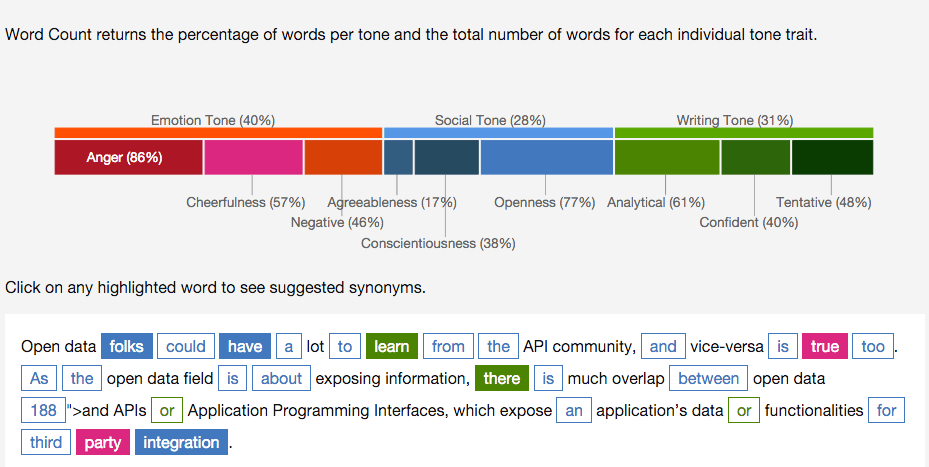

Emotional tone analysis tools help neurodivergent individuals interpret the tone of written messages or emails, reducing social anxiety and cognitive load in workplace environments where communication nuance can be a barrier to success.

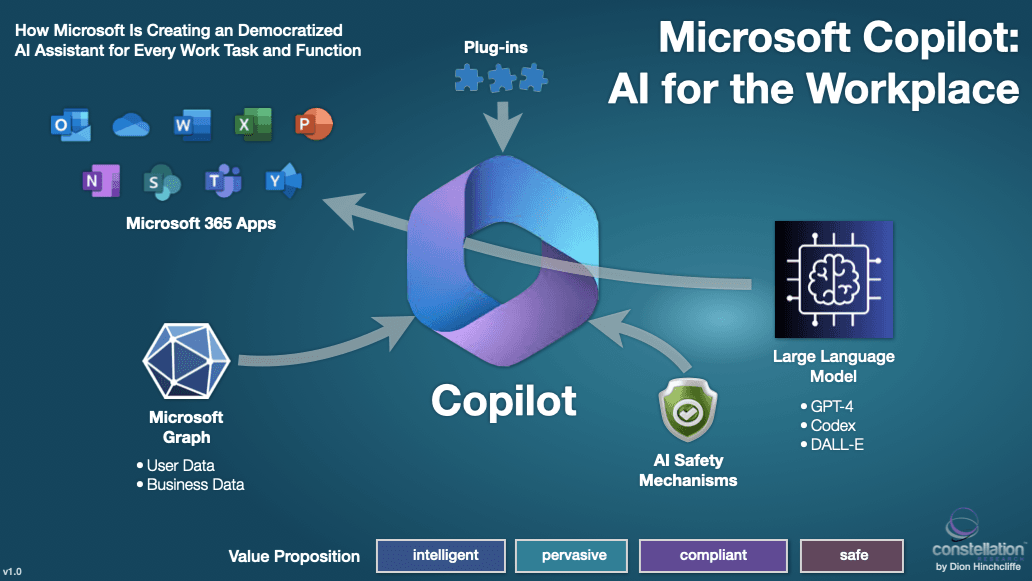

The Copilot Effect

Microsoft Copilot has emerged as particularly transformative in workplace settings. A 2024 EY study found that 76% of neurodivergent employees reported that Copilot helped them perform better at work by enhancing:

- Communication clarity and efficiency

- Memory recall and task tracking

- Focus and attention management

- Document creation and comprehension

This isn't about making neurodivergent workers "normal"; it's about giving them tools that amplify their existing strengths while supporting areas of challenge.

5. The Multimodal Integration Revolution

Beyond Single-Domain Solutions

The most powerful assistive technologies don't serve just one disability domain; they integrate across multiple needs.

A user might employ:

- Vision AI to navigate to a meeting

- Real-time transcription to participate in the discussion

- Tone analysis to interpret interpersonal dynamics

- Task management AI to follow up on action items

- Voice synthesis to present their ideas with clarity

This seamless integration creates what was previously impossible: full participation in complex social and professional environments without constant accommodation requests or exhausting workarounds.

6. The Personalisation Paradigm

AI That Learns You

Unlike static assistive devices of the past, AI-powered tools continuously learn and adapt:

- Your speech patterns become more easily recognised

- Your navigation preferences inform route suggestions

- Your work style shapes task breakdown and reminders

- Interface complexity adjusts to your communication needs

The technology doesn't just serve you; it becomes attuned to you, creating a genuinely personalised assistance experience that improves over time.

The Numbers Behind the Transformation

Let's quantify the revolution:

- 43 million+ vision assistance requests processed in 2024 by Be My AI alone

- 1.5 billion people with hearing loss who stand to benefit from real-time transcription and captioning

- 76% of neurodivergent employees reporting better work performance with Microsoft Copilot (EY, 2024)

- 90-140% productivity gains when neurodivergent individuals work in roles aligned with their strengths, supported by AI

- $980 billion in annual economic costs from unaddressed hearing loss, costs that AI solutions can help reduce

The Accessibility Ecosystem: Six Domains, One Vision

The AI assistive technology revolution operates across six interconnected domains:

- Vision Support - Computer vision, scene description, contextual understanding

- Hearing & Communication - Real-time transcription, sign language recognition, voice synthesis

- Mobility & Navigation - Intelligent wheelchairs, exoskeletons, adaptive navigation

- Speech & Communication - AAC devices, voice cloning, atypical speech recognition

- Cognitive Support - Task management, executive function tools, adaptive learning

- Neurodiversity - Tone analysis, planning assistants, personalised interfaces

Each domain reinforces the others, creating an ecosystem of support that addresses the complex, multifaceted nature of human disability and neurodiversity.

Beyond Compensation: Enhancement and Amplification

Perhaps the most profound shift is philosophical. Early assistive technologies aimed to compensate for disabilities, bringing users "up to" typical functioning levels.

AI-powered assistive technologies do something different: they amplify existing capabilities and enable users to leverage their unique cognitive and physical profiles as strengths rather than deficits.

A neurodivergent individual doesn't need to become neurotypical to succeed. They need tools that work with their brain, not against it. A person with mobility impairments doesn't need to walk like everyone else. They need intelligent systems that make their chosen mode of movement as efficient and independent as possible.

The Implementation Gap: From Technology to Access

The technology exists. The benefits are measurable. The economic case is compelling. Yet significant barriers remain:

- Cost - Many AI-powered solutions remain expensive

- Awareness - Users don't know what's available

- Training - Effective use requires learning and support

- Infrastructure - Internet access, smartphone ownership, and technical literacy vary globally

- Design - Not all AI tools are designed with accessibility from the ground up

Bridging these gaps requires coordinated effort across technology companies, governments, healthcare systems, educational institutions, and advocacy organisations.

The Path Forward: What Comes Next

The AI assistive technology revolution is accelerating:

- Multimodal AI models will provide even richer contextual understanding

- Edge computing will enable real-time processing without internet dependence

- Brain-computer interfaces will create direct neural pathways for control and communication

- Personalised AI agents will serve as persistent assistants that know individual needs intimately

- Open-source solutions will democratise access beyond proprietary platforms

But technology alone isn't enough. The ultimate barrier is not technical; it's social and systemic.

Technology as Liberation

AI-powered assistive technologies represent more than innovation; they represent liberation.

Liberation from dependence. Liberation from isolation. Liberation from barriers that separate human potential from human achievement.

When Be My Eyes processes 43 million requests in a year, those aren't just transactions; they're moments of independence, participation, and dignity. When 76% of neurodivergent employees say AI support helps them perform better, that's not just productivity; it's self-actualisation. When someone with a speech impairment uses voice cloning to communicate in their own voice, that's not just technology; it's identity reclaimed.

The barriers are breaking down. The question is whether we'll accelerate that process through intentional investment, inclusive design, and universal access, or whether we'll allow the digital divide to create new forms of exclusion even as old barriers fall.

The technology is ready. The need is urgent. The opportunity is extraordinary.

What happens next depends on us.

Key Impact Metrics:

- 43M+ vision assistance requests processed (2024)

- 1.5B people with hearing loss who could benefit from AI hearing solutions

- 76% of neurodivergent employees reporting better work performance with AI support

- 90-140% productivity gains with proper AI support

- $980B annual economic cost of unaddressed hearing loss

Next in this series: Part 3 will explore workplace transformation, examining how organisations are reimagining employment through AI-powered inclusive practices.
