The Chronological Timeline of AI and Computing History

Dinis Guarda, Author

Mon Mar 17 2025

The evolution of artificial intelligence and computing represents one of humanity's most remarkable technological journeys. From the earliest mechanical calculators to today's sophisticated AI systems, this timeline charts the pivotal moments, breakthrough innovations, and visionary minds that transformed theoretical concepts into the digital reality we experience today. This article will explore the fascinating chronology of computational advancements that have revolutionised how we live, work, and understand the world around us.


The Dream of Advanced Intelligence and Thinking Machines

For millennia, humans have been captivated by the possibility of creating entities that could think like us. From the ancient Greek myths of Hephaestus's automata to Leonardo da Vinci's mechanical knight, the dream of crafting intelligent machines has persisted throughout human history. This enduring fascination speaks to something profound in our nature: the desire to understand our own minds by recreating them.

Artificial intelligence, as a formal field of study, emerged in the mid-20th century, but its foundations were laid much earlier through centuries of developments in mathematics, philosophy, and computing. The journey from mathematical logic to modern deep learning systems represents one of humanity's most ambitious intellectual endeavors—an attempt to understand and replicate intelligence itself.

This article traces the full arc of AI's development, from its philosophical and mathematical prerequisites to the sophisticated systems that now permeate our daily lives. We'll explore how AI evolved from abstract theoretical concepts to practical technologies that recognize speech, translate languages, play complex games, create art, and assist in scientific discovery. Along the way, we'll meet the visionaries, mathematicians, computer scientists, and entrepreneurs who transformed AI from science fiction into reality.

The history of artificial intelligence is not a linear progression but rather a series of advances, setbacks, and paradigm shifts. Periods of rapid progress have been followed by "AI winters" of reduced funding and diminished expectations. Competing visions of how to achieve machine intelligence have led to different research traditions, from symbolic reasoning to neural networks, from expert systems to statistical learning approaches.

Today, AI stands at a pivotal moment in its history. Recent breakthroughs in deep learning, reinforcement learning, and large language models have delivered capabilities that would have seemed impossible just a decade ago. As AI systems become increasingly integrated into society, understanding the field's history becomes essential for anyone seeking to comprehend its present state and potential future.

This article will highlight the historical benchmarks that have defined the field, introduce the personalities who shaped it, examine the products that brought AI into everyday life, and explore the scientific breakthroughs that made it all possible. In doing so, we aim to provide a comprehensive understanding of how artificial intelligence—perhaps humanity's most transformative technology—came to be.

The Mathematical and Computational Foundations

Early Mathematical Concepts

The history of artificial intelligence begins not with computers or robots, but with fundamental developments in mathematics and logic. The ancient Greeks, particularly Aristotle in the 4th century BCE, established the first formal system of logical reasoning. His syllogistic logic provided a framework for deductive reasoning that would influence thought for the next two millennia.

The 17th century brought revolutionary changes to mathematical thinking. Gottfried Wilhelm Leibniz (1646-1716) envisioned a universal language of reasoning, which he called the "characteristica universalis," and a "calculus ratiocinator" that would mechanize thought itself. Though Leibniz never fully realized these ambitious ideas, they presaged later developments in mathematical logic and computing.

The 19th century saw mathematics expand in directions crucial for the later development of AI. George Boole (1815-1864) developed Boolean algebra, which reduced logical reasoning to a system of mathematical equations over the values true and false. Boolean logic would eventually become the foundation of digital circuit design and computer programming. At around the same time, Boole's contemporary Augustus De Morgan (1806-1871) formulated De Morgan's laws: the negation of "A and B" is equivalent to "not A or not B," and the negation of "A or B" is equivalent to "not A and not B."
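To make Boole's idea concrete: once true and false are treated as values, a logical law can be checked by evaluating every possible case. The short Python sketch below (the function names are illustrative, not drawn from any historical source) builds the truth table for two variables and confirms both of De Morgan's laws.

```python
from itertools import product


def truth_table():
    """Rows of (A, B, A AND B, A OR B, NOT A) for every pair of truth values."""
    return [(a, b, a and b, a or b, not a) for a, b in product([False, True], repeat=2)]


def de_morgan_holds():
    """Check both of De Morgan's laws on every combination of truth values."""
    for a, b in product([False, True], repeat=2):
        # First law: NOT (A AND B) is equivalent to (NOT A) OR (NOT B)
        if (not (a and b)) != ((not a) or (not b)):
            return False
        # Second law: NOT (A OR B) is equivalent to (NOT A) AND (NOT B)
        if (not (a or b)) != ((not a) and (not b)):
            return False
    return True


for row in truth_table():
    print(row)
print(de_morgan_holds())  # True
```

With only two variables there are just four cases, so exhaustive checking amounts to a complete proof; the same brute-force idea underlies how digital circuits are verified against their truth tables.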

Gottlob Frege's "Begriffsschrift" (1879) introduced predicate logic, greatly expanding the expressive power of formal logic beyond Boole's system. This was followed by Bertrand Russell and Alfred North Whitehead's monumental "Principia Mathematica" (1910-1913), which attempted to derive all of mathematics from logical principles. Though Kurt Gödel's incompleteness theorems (1931) would later prove the limitations of such formal systems, these works established the mathematical foundation upon which computer science would be built.

From Computation to Computing Machines

The concept of computation itself has ancient roots. The abacus, dating back at least 4,000 years, represents one of humanity's earliest computational devices. More sophisticated mechanical calculators emerged in the 17th century, with Blaise Pascal's Pascaline (1642) and Leibniz's Stepped Reckoner (1673) demonstrating that mathematical operations could be mechanized.

Charles Babbage (1791-1871) conceived of far more ambitious computing machines. His Difference Engine, designed in the 1820s, was intended to tabulate polynomial functions automatically using the method of finite differences, which reduces the work to repeated addition. More significantly, his Analytical Engine, designed in the 1830s but never completed, incorporated many features of modern computers: a "store" (memory), a "mill" (processor), and the ability to be programmed using punched cards. Ada Lovelace (1815-1852), who wrote extensive notes on the Analytical Engine, is often credited with creating the first algorithm intended for machine execution (a procedure for computing Bernoulli numbers), making her, in a sense, the world's first computer programmer.
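The method of finite differences behind the Difference Engine can be sketched in a few lines of Python. For a polynomial of degree n, the n-th difference between successive values is constant, so once the starting value and its differences are set, every further value follows from additions alone. The function names and the example polynomial x² + x + 41 are illustrative choices, and this models the mathematical principle rather than Babbage's actual mechanism.

```python
def initial_differences(f, degree):
    """Compute [f(0), Δf(0), ..., Δ^degree f(0)] from the first degree + 1 values of f."""
    row = [f(x) for x in range(degree + 1)]
    diffs = []
    while row:
        diffs.append(row[0])
        # Next row holds the differences between neighbouring entries
        row = [b - a for a, b in zip(row, row[1:])]
    return diffs


def tabulate(diffs, steps):
    """Produce successive polynomial values using only addition."""
    columns = list(diffs)
    values = []
    for _ in range(steps):
        values.append(columns[0])
        # Each column adds in the (not yet updated) column to its right
        for i in range(len(columns) - 1):
            columns[i] += columns[i + 1]
    return values


start = initial_differences(lambda x: x * x + x + 41, degree=2)  # [41, 2, 2]
print(tabulate(start, 6))  # [41, 43, 47, 53, 61, 71]
```

Only the first few values of the polynomial are ever computed directly; after that, the table grows by addition, which is exactly the kind of operation gears and wheels can perform reliably.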

The mathematical theory of computation advanced significantly with Alan Turing's 1936 paper "On Computable Numbers," which introduced an abstract model of computation (now known as the Turing machine) and showed that a single universal machine could simulate any other such machine. This theoretical construct established the mathematical limits of computation, since Turing proved that some well-defined problems cannot be solved by any such machine, and it provided a conceptual blueprint for general-purpose computers.

The practical development of electronic computers accelerated during World War II. John Atanasoff and Clifford Berry built the Atanasoff-Berry Computer in 1942, often credited as the first electronic digital computer, although it was not programmable. The British Colossus computers, developed at Bletchley Park to decrypt German messages, became operational in 1943. In the United States, the ENIAC (Electronic Numerical Integrator and Computer), completed in 1945, was the first general-purpose, programmable electronic digital computer.

John von Neumann's architecture for stored-program computers, described in 1945, allowed programs to be stored in the same memory as data. This innovation, first implemented in the Manchester Baby computer (1948) and the EDSAC (1949), formed the basis for virtually all subsequent computer designs.

The development of high-level programming languages, beginning with FORTRAN (1957), LISP (1958), and COBOL (1959), made computers more accessible to a wider range of researchers and laid the groundwork for the first AI programs. LISP, developed by John McCarthy, would become particularly important for AI research due to its flexibility in handling symbolic expressions.

These mathematical and computational advances provided the essential foundation upon which artificial intelligence would be built. Without Boolean logic, there would be no digital computers; without Turing's theoretical insights, there would be no concept of universal computation; without stored-program computers and high-level programming languages, there would be no practical way to implement AI algorithms. The history of AI is thus inseparably intertwined with the broader history of mathematics and computing.

The Birth of Artificial Intelligence (1943-1956)

The field of artificial intelligence emerged gradually from converging lines of research in neurology, mathematics, and electrical engineering. In 1943, Warren McCulloch and Walter Pitts published their groundbreaking paper "A Logical Calculus of the Ideas Immanent in Nervous Activity," which proposed a mathematical model of neural networks. This paper demonstrated how simple computational units, modeled after neurons, could perform logical operations. Though far from creating actual intelligence, their work established the crucial connection between computation and neural processes.
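A minimal sketch of a McCulloch-Pitts style unit in Python: binary inputs are weighted, summed, and compared with a threshold, and the unit "fires" (outputs 1) when the threshold is reached. The gate function names are hypothetical, and the negative weight in not_gate is a modern simplification; the 1943 paper treated inhibition as absolute, so that any active inhibitory input prevented firing.

```python
def mp_neuron(inputs, weights, threshold):
    """Return 1 (fire) if the weighted sum of binary inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def and_gate(a, b):
    # Both inputs must be on to reach a threshold of 2
    return mp_neuron([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    # Either input alone reaches a threshold of 1
    return mp_neuron([a, b], [1, 1], threshold=1)


def not_gate(a):
    # Negative (inhibitory) weight: the unit fires only when its input is off
    return mp_neuron([a], [-1], threshold=0)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b))
```

The same unit computes different logical operations purely by changing its weights and threshold, which is the link between neurons and logic that McCulloch and Pitts drew.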

Donald Hebb's 1949 book "The Organization of Behavior" introduced the concept now known as Hebbian learning, suggesting that neural pathways strengthen with repeated use. His ideas about how neurons modify their connections through experience would later influence the development of learning algorithms for artificial neural networks.
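Hebb stated his idea in words; a common modern formalization increases each connection weight by a learning rate times the activity of the input times the activity of the output. The Python sketch below assumes that simple product form (the learning rate and sample data are hypothetical) to show how a connection that is always active when the output fires grows stronger than one that is active only some of the time.

```python
def hebbian_update(weights, inputs, output, learning_rate=0.1):
    """One Hebbian step: each weight grows by rate * input * output."""
    return [w + learning_rate * x * output for w, x in zip(weights, inputs)]


def train_hebbian(weights, samples, learning_rate=0.1, epochs=1):
    """Apply the Hebbian update to every (inputs, output) sample, epochs times."""
    for _ in range(epochs):
        for inputs, output in samples:
            weights = hebbian_update(weights, inputs, output, learning_rate)
    return weights


# Input 0 is active every time the output fires; input 1 only half the time.
samples = [([1, 0], 1), ([1, 1], 1)]
print(train_hebbian([0.0, 0.0], samples, learning_rate=0.5, epochs=2))  # [2.0, 1.0]
```

Because nothing in this basic rule ever weakens a connection, the weights grow without bound under continued training; later variants of Hebbian learning add normalization or decay to keep them in check.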

The Dartmouth Conference of 1956 marked the official birth of artificial intelligence as a distinct field of study. Organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, this summer workshop brought together researchers interested in "the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it." It was McCarthy who proposed the name "artificial intelligence" for this new field.

The Dartmouth Conference participants included many figures who would become pioneers in AI research, including Arthur Samuel, Oliver Selfridge, Ray Solomonoff, and Trenchard More. Though the workshop did not produce the breakthroughs its organizers had hoped for, it established AI as a legitimate field of research and created a community of researchers dedicated to the goal of creating machine intelligence.

The Golden Years: Early Enthusiasm and Achievements (1956-1973)

The period following the Dartmouth Conference saw rapid progress and boundless optimism in AI research. Allen Newell and Herbert Simon's Logic Theorist (1956), written with Cliff Shaw, proved theorems from Whitehead and Russell's "Principia Mathematica," and their subsequent General Problem Solver (1957) attempted to simulate human problem-solving processes. In 1957, Simon boldly predicted that "within ten years a digital computer will be the world's chess champion" and that "within ten years a digital computer will discover and prove an important new mathematical theorem."

John McCarthy developed the LISP programming language at MIT in 1958, providing a powerful tool for AI research that would remain influential for decades. That same year, he presented "Programs with Common Sense," describing the Advice Taker, a hypothetical program that would reason over common-sense knowledge expressed in formal logic and could improve its behavior simply by being told new facts. It was an early conception of what we would now call a general AI system.

Frank Rosenblatt's perceptron, a simple type of neural network, generated excitement when it was first demonstrated in 1958. The perceptron could learn to recognize simple patterns, and Rosenblatt predicted that an advanced version would eventually "be able to recognize people and call out their names" and even "cross the street safely." Though these predictions proved premature, the perceptron represented an important step in machine learning.
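The perceptron's learning procedure is simple enough to write out in full. The Python sketch below follows the standard textbook form of the perceptron rule (the function names, zero initialization, and learning rate of 1 are assumptions) and trains a single unit to compute logical AND, a pattern it can learn because a straight line separates the inputs that should output 1 from those that should output 0.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum plus bias is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Perceptron learning rule: nudge the weights toward each misclassified example."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if mistakes == 0:  # every example classified correctly
            break
    return weights, bias


and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_samples)
print([predict(weights, bias, x) for x, _ in and_samples])  # [0, 0, 0, 1]
```

For any linearly separable task like this one, the perceptron convergence theorem guarantees that the loop eventually stops making mistakes; the epoch limit only guards against data that cannot be separated.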

The 1960s saw the development of specialized AI systems capable of impressive feats in narrow domains. Daniel Bobrow's STUDENT program (1964) could solve algebra word problems stated in natural language. Joseph Weizenbaum's ELIZA (1966) simulated a psychotherapist by matching keywords and patterns in users' statements and reflecting them back as questions, creating a surprisingly compelling illusion of understanding. Terry Winograd's SHRDLU (1970) could understand and execute complex natural-language commands within its limited "blocks world" environment.
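ELIZA's illusion rested on keyword matching and reassembly rules rather than on understanding. The Python sketch below is a drastically simplified imitation, not Weizenbaum's DOCTOR script: the three rules and the pronoun table are hypothetical, and the real program ranked keywords and used far richer decomposition rules.

```python
import re

# Pronoun swaps so that "my exams" is echoed back as "your exams".
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "me", "your": "my"}

# Hypothetical (pattern, response) rules, tried in order; {0} receives the reflected text.
RULES = [
    (re.compile(r"i need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r".*\bmother\b.*", re.IGNORECASE), "Tell me more about your family."),
]
DEFAULT_RESPONSE = "Please go on."


def reflect(fragment):
    """Swap first- and second-person words in a captured fragment."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(statement):
    """Return the response of the first rule whose pattern matches the whole statement."""
    text = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(text)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return DEFAULT_RESPONSE


print(respond("I am worried about my exams."))
```

The program never represents what "exams" or "worried" mean; it only rearranges the user's own words, which is why Weizenbaum was disturbed by how readily people attributed understanding to it.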

Government funding for AI research was abundant during this period, particularly from ARPA (the Advanced Research Projects Agency, renamed DARPA in 1972) in the United States. Dedicated AI laboratories were established at MIT, Stanford, and other institutions, creating hubs for research and innovation.

Yet even amid this progress, the limitations of early AI systems were becoming apparent. In 1969, Marvin Minsky and Seymour Papert published "Perceptrons," which demonstrated the mathematical limitations of single-layer neural networks; for example, a single-layer perceptron cannot compute the exclusive-or (XOR) function. Though their analysis did not apply to multi-layer networks, the book contributed to a decline in neural network research that would last for more than a decade.
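The XOR limitation can be checked directly. Assuming a single threshold unit that outputs 1 exactly when w₁x₁ + w₂x₂ + b > 0 (one common convention), the four XOR cases impose the following conditions, which cannot all hold at once:

```latex
\begin{aligned}
(x_1, x_2) = (0,0),\ \text{target } 0 &:\quad b \le 0 \\
(x_1, x_2) = (1,0),\ \text{target } 1 &:\quad w_1 + b > 0 \\
(x_1, x_2) = (0,1),\ \text{target } 1 &:\quad w_2 + b > 0 \\
(x_1, x_2) = (1,1),\ \text{target } 0 &:\quad w_1 + w_2 + b \le 0 \\[4pt]
\text{adding the middle two lines} &:\quad w_1 + w_2 + b > -b \ge 0
\end{aligned}
```

The last line contradicts the fourth, so no choice of w₁, w₂ and b works, and the same argument goes through if the unit fires when the sum is greater than or equal to 0. A network with a hidden layer escapes this argument: XOR(x₁, x₂) equals AND(OR(x₁, x₂), NOT AND(x₁, x₂)), and each of those three operations can be computed by a single threshold unit.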

The First AI Winter and the Rise of Expert Systems (1973-1980)

The initial enthusiasm for AI research gave way to a period of reduced funding and diminished expectations, commonly known as the "first AI winter." Several factors contributed to this downturn. AI systems of the era proved brittle—they could perform impressively in carefully constrained environments but failed when faced with real-world complexity. The translation of research prototypes into practical applications proved more difficult than anticipated. James Lighthill's influential 1973 report to the British Science Research Council criticized the failure of AI to achieve its "grandiose objectives" and led to severe cuts in AI research funding in the UK.

In the United States, DARPA's director became frustrated with the lack of practical results from AI research and redirected funding toward more immediately applicable projects. The end of the Vietnam War also reduced the military's interest in funding basic research without clear defense applications.

Despite this climate of skepticism, important work continued. The concept of "expert systems" (programs that captured the specialized knowledge of human experts in specific domains) emerged as a promising approach. Edward Feigenbaum, often called the "father of expert systems," led the development of DENDRAL (1965-1983) with Bruce Buchanan and Joshua Lederberg; it inferred the molecular structure of chemical compounds from mass spectrometer data. His Stanford group also produced MYCIN (early 1970s), developed by Edward Shortliffe, which identified the bacteria causing severe infections such as bacteremia and meningitis and recommended antibiotic treatments.

Expert systems represented a shift in AI strategy. Rather than attempting to create general-purpose intelligence, researchers focused on encoding the specific knowledge of human experts in narrow domains. This approach proved more immediately practical and helped revive interest in AI applications.
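The knowledge in many expert systems was written as if-then rules. The Python sketch below shows forward chaining, one common way of applying such rules: keep firing any rule whose conditions are all known facts until nothing new can be concluded. The rules are a hypothetical toy knowledge base, and real systems differed; MYCIN, for example, reasoned backward from possible diagnoses and attached certainty factors to its conclusions.

```python
def forward_chain(facts, rules):
    """Fire every rule whose conditions are all known, until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when its condition set is a subset of the known facts
            if conclusion not in known and conditions <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy knowledge base: (set of conditions, conclusion) pairs.
RULES = [
    ({"has_feathers"}, "is_bird"),
    ({"is_bird", "cannot_fly"}, "is_flightless_bird"),
    ({"is_flightless_bird", "swims", "black_and_white"}, "is_penguin"),
]

print(sorted(forward_chain({"has_feathers", "cannot_fly", "swims", "black_and_white"}, RULES)))
```

Separating the rules from the inference loop is what made expert systems attractive: domain experts could, in principle, extend the knowledge base without changing the program that applies it.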

The Second Spring: Expert Systems and the Fifth Generation Project (1980-1987)

The commercial success of expert systems in the early 1980s led to renewed optimism and investment in AI. Companies began developing and deploying expert systems for various applications, from configuring computer systems (XCON at Digital Equipment Corporation) to assisting with geological exploration (Dipmeter Advisor at Schlumberger).

In 1981, Japan announced its ambitious "Fifth Generation Computer Systems" project (formally launched in 1982), a government-industry collaboration aimed at developing intelligent computers running on parallel processing hardware. This ten-year plan, with its substantial budget and bold objectives, raised concerns in the United States and Europe about falling behind in AI research.

In response, the UK established the Alvey Programme in 1983, and the European Economic Community launched the European Strategic Program on Research in Information Technology (ESPRIT) the same year. In the United States, DARPA launched its Strategic Computing Initiative in 1983, increasing its funding for AI research, and corporations established their own AI research laboratories.

During this period, new approaches to machine learning gained traction. In 1982, John Hopfield described a form of neural network (the Hopfield network) that could serve as content-addressable memory. In 1986, David Rumelhart, Geoffrey Hinton, and Ronald Williams published an influential paper that popularized backpropagation, an effective method for training multi-layer neural networks that had appeared in earlier work but had not been widely adopted. This work would later prove crucial for the deep learning revolution of the 2010s.
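A Hopfield network's role as content-addressable memory can be illustrated with one standard textbook formulation: units take the values +1 or -1, patterns are stored with a Hebbian outer-product rule, and units are updated one at a time until the state stops changing. The Python sketch below assumes that formulation; the two stored patterns and the function names are hypothetical. Given a corrupted copy of a stored pattern, the network settles back to the original.

```python
def train_hopfield(patterns):
    """Hebbian storage: W[i][j] = sum over patterns of p[i] * p[j], with no self-connections."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j]
    return weights


def recall(weights, state, max_sweeps=100):
    """Update units one at a time, in order, until a full sweep changes nothing."""
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            h = sum(weights[i][j] * state[j] for j in range(n))
            # Take the sign of the input; keep the current value on a tie
            new = state[i] if h == 0 else (1 if h > 0 else -1)
            if new != state[i]:
                state[i] = new
                changed = True
        if not changed:
            break
    return state


p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train_hopfield([p1, p2])
noisy = [-p1[0]] + p1[1:]  # p1 with its first unit flipped
print(recall(weights, noisy) == p1)  # True
```

The memory is "content-addressable" because retrieval starts from a partial or damaged version of the content itself rather than from an address; how many patterns can be stored reliably depends on the network size and on how similar the patterns are.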

The Second AI Winter (1987-1993)

The bubble of enthusiasm around expert systems and the Fifth Generation project eventually burst, leading to what is known as the "second AI winter." Expert systems proved expensive to create and maintain, and their brittleness limited their usefulness outside narrow domains. The Fifth Generation project failed to achieve many of its ambitious goals, though it did advance parallel computing.

As desktop computers became more powerful in the late 1980s, they began to outperform the specialized AI hardware that companies like Symbolics and Lisp Machines Inc. had developed. This undermined the business model of these AI-focused computer manufacturers and led to their decline.

Funding for AI research contracted once again, and the field faced another period of skepticism and reduced expectations. Yet even during this difficult period, researchers continued to make important advances, particularly in machine learning and robotics.

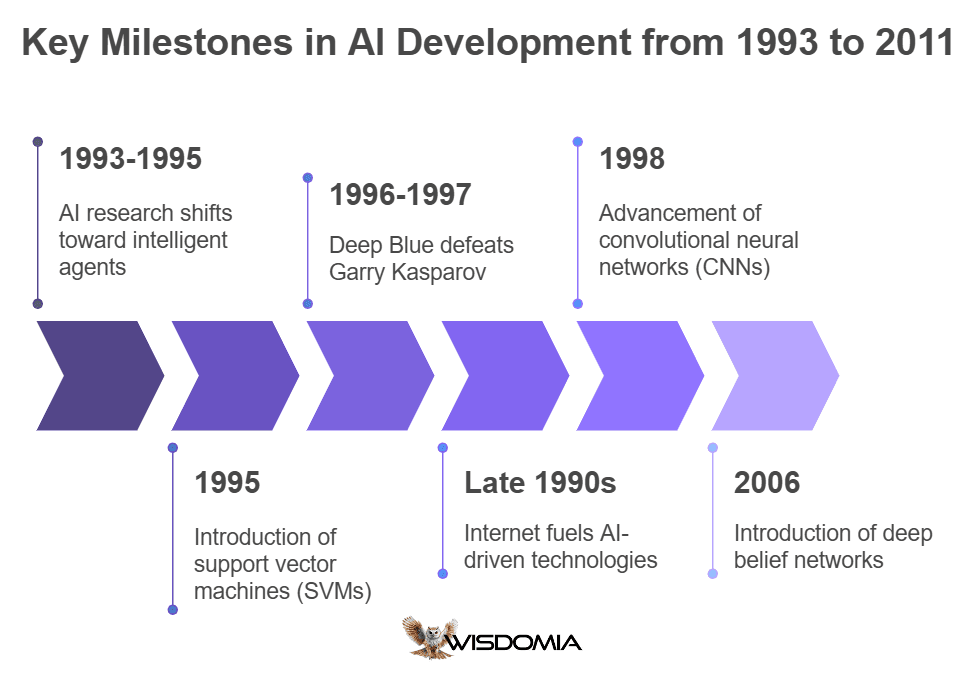

The Emergence of Intelligent Agents and the Return of Neural Networks (1993-2011)

The mid-1990s saw AI research shift toward the concept of "intelligent agents"—software entities that could perceive their environment, reason, and take actions to achieve specific goals. This agent-oriented approach, combined with advances in probability theory and decision theory, led to the development of more robust and adaptive AI systems.

The field also benefited from the explosive growth of the internet, which provided vast amounts of data and created demand for technologies like search engines and recommendation systems that could leverage AI techniques. In 1997, IBM's Deep Blue defeated world chess champion Garry Kasparov, demonstrating that computers could outperform humans in specific complex tasks.

Machine learning techniques, particularly those based on statistical approaches, gained prominence during this period. Support vector machines, developed by Vladimir Vapnik and colleagues and given their widely used soft-margin form by Corinna Cortes and Vapnik in 1995, proved effective for classification tasks. Reinforcement learning, advanced by Richard Sutton and Andrew Barto among others, enabled systems to learn through trial and error from reward signals.

Neural networks, which had fallen out of favor during the previous AI winter, began a gradual comeback. Yann LeCun's work on convolutional neural networks for image recognition, beginning in the late 1980s, demonstrated the potential of deep learning approaches. In 2006, Geoffrey Hinton and colleagues published research on deep belief networks, showing how neural networks with many layers could be trained effectively by pre-training them one layer at a time.

The Deep Learning Revolution and Beyond (2011-2025)

The true breakthrough for deep learning came in 2012, when AlexNet, a deep convolutional neural network built by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, won the ImageNet Large Scale Visual Recognition Challenge with a top-5 error rate of about 15 percent, against about 26 percent for the runner-up. This watershed moment demonstrated the power of deep learning for computer vision tasks and sparked renewed interest in neural network approaches.

The subsequent years saw rapid advances in deep learning across multiple domains. Recurrent neural networks with long short-term memory (LSTM) units, developed by Sepp Hochreiter and Jürgen Schmidhuber in the 1990s but only widely adopted in the 2010s, proved effective for tasks involving sequential data, such as speech recognition and language translation.

In 2014, Ian Goodfellow and his colleagues introduced generative adversarial networks (GANs), which enabled systems to create realistic images and other content. In 2016, Google's DeepMind AlphaGo defeated Lee Sedol, one of the world's strongest Go players, by four games to one, accomplishing a goal that many experts had thought was decades away.

The development of transformer architectures, introduced in the 2017 paper "Attention Is All You Need" by Vaswani et al., revolutionized natural language processing. Transformers formed the basis for models like BERT (2018), GPT (2018), and their successors, which demonstrated unprecedented language understanding and generation capabilities.

The period from 2020 to 2025 saw these models scaled to hundreds of billions of parameters (GPT-3 alone had 175 billion), creating systems capable of sophisticated language understanding, code generation, and even multimodal processing across text, images, and audio. OpenAI's GPT-3 (2020) and GPT-4 (2023), Anthropic's Claude (first released in 2023), Google's Gemini (2023), and Meta's Llama series (2023-2024) pushed the boundaries of what large language models could achieve.

These advances in AI technology led to the rapid deployment of AI systems across industries, from healthcare and finance to education and entertainment. Self-driving vehicles, virtual assistants, recommender systems, and other AI applications became increasingly integrated into everyday life.

As AI capabilities expanded, so did concerns about their societal impact. Issues of bias in AI systems, privacy implications, potential job displacement, and the ethical considerations of increasingly autonomous systems became subjects of intense debate. Governments around the world began developing regulatory frameworks for AI, attempting to balance innovation with public safety and welfare.

By 2025, artificial intelligence had transformed from a niche academic discipline to a general-purpose technology reshaping virtually every aspect of human society. The dream of creating thinking machines, which had captivated humans for millennia, had evolved into a complex reality with profound implications for the future of humanity.

Influential Voices: Quotes from AI's Leading Minds

The journey of artificial intelligence has been shaped by brilliant thinkers whose words have illuminated both possibilities and perils. Their insights reveal the evolving perspectives on this transformative technology:

"I believe that at the end of the century the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted." - Alan Turing, computing pioneer who laid the theoretical foundations for AI

"AI is the new electricity. Just as electricity transformed almost everything 100 years ago, today I actually have a hard time thinking of an industry that I don't think AI will transform in the next several years." - Andrew Ng, co-founder of Google Brain and former chief scientist at Baidu

"We need to be super careful with AI. It is potentially more dangerous than nukes." - Elon Musk, highlighting concerns about advanced artificial intelligence

"The development of full artificial intelligence could spell the end of the human race... it would take off on its own, and re-design itself at an ever-increasing rate." - Stephen Hawking, expressing caution about uncontrolled AI development

"I'm not worried about machines that think like humans. I'm more concerned about people who think like machines." - Fei-Fei Li, AI researcher and co-director of Stanford's Human-Centered AI Institute

"A child playing with matches doesn't understand why you're scolding them because they don't understand the risk of burning the house down." - Geoffrey Hinton, "godfather of deep learning," on the unforeseen dangers of AI

"The biggest existential threat is probably AI. I'm increasingly inclined to think there should be some regulatory oversight, maybe at the national and international level." - Elon Musk, advocating for AI regulation

"What we're seeing now with generative AI and other AI technologies is that we're getting into a period of time where it's going to be more important than ever that we have a diverse set of voices." - Dario Amodei, CEO of Anthropic

Conclusion: The Unfolding Journey of AI and Computing

As we reflect on this chronological timeline of AI and computing history, we witness not merely a series of technological innovations, but the unfolding of humanity's quest to extend our cognitive capabilities beyond natural limits. From Charles Babbage's Analytical Engine to today's sophisticated deep learning systems, each advancement has built upon previous discoveries, accelerating our journey into new frontiers of possibility. While we cannot predict with certainty where these technologies will take us next, the trajectory suggests transformative impacts across every sector of society.

The story of AI and computing is ultimately a human story—one of curiosity, creativity, and persistent problem-solving in the face of seemingly insurmountable challenges. As we stand at this pivotal moment in technological evolution, the lessons of this historical timeline remind us that responsible stewardship and inclusive development will be crucial in shaping an AI future that amplifies human potential and addresses our most pressing global challenges.


Dinis Guarda is an author, entrepreneur, and founder and CEO of ztudium, Businessabc, citiesabc.com and Wisdomia.ai. Dinis is an AI leader, researcher and creator who has been building proprietary solutions based on technologies like digital twins, 3D, spatial computing, and AR/VR/MR. Dinis is also the author of multiple books, including "4IR AI Blockchain Fintech IoT Reinventing a Nation." He has collaborated with organizations such as UN / UNITAR, UNESCO, the European Space Agency, IBM, Siemens, Mastercard, USAID, and the Government of Malaysia, to mention a few. He has been a guest lecturer at business schools such as Copenhagen Business School. Dinis is ranked as one of the most influential people and thought leaders in Thinkers360 / Rise Global's The Artificial Intelligence Power 100 and among the Top 10 Thought Leaders in AI, smart cities, metaverse, blockchain, and fintech.
