What Is Consciousness? The Question That Defines What It Means to Be Human

Sara Srifi

Mon Nov 10 2025

As AI grows more human-like, we face an urgent question: what is consciousness? Exploring the hierarchy of awareness and why vulnerability might be the key to understanding what makes a being truly sentient.

As AI grows more sophisticated, the ancient question of consciousness takes on urgent new meaning

The Mirror Test

Right now, as you read these words, something extraordinary is happening. You're not just processing information; you're experiencing it. There's something it feels like to be you, reading this sentence, in this moment. That feeling, that inner movie playing in your mind, is consciousness.

It's the most intimate thing we know, yet the hardest to explain. And as artificial intelligence grows increasingly capable, mimicking human conversation, creativity, and reasoning, we face a startling question: Could a machine ever truly be conscious? And how would we even know?

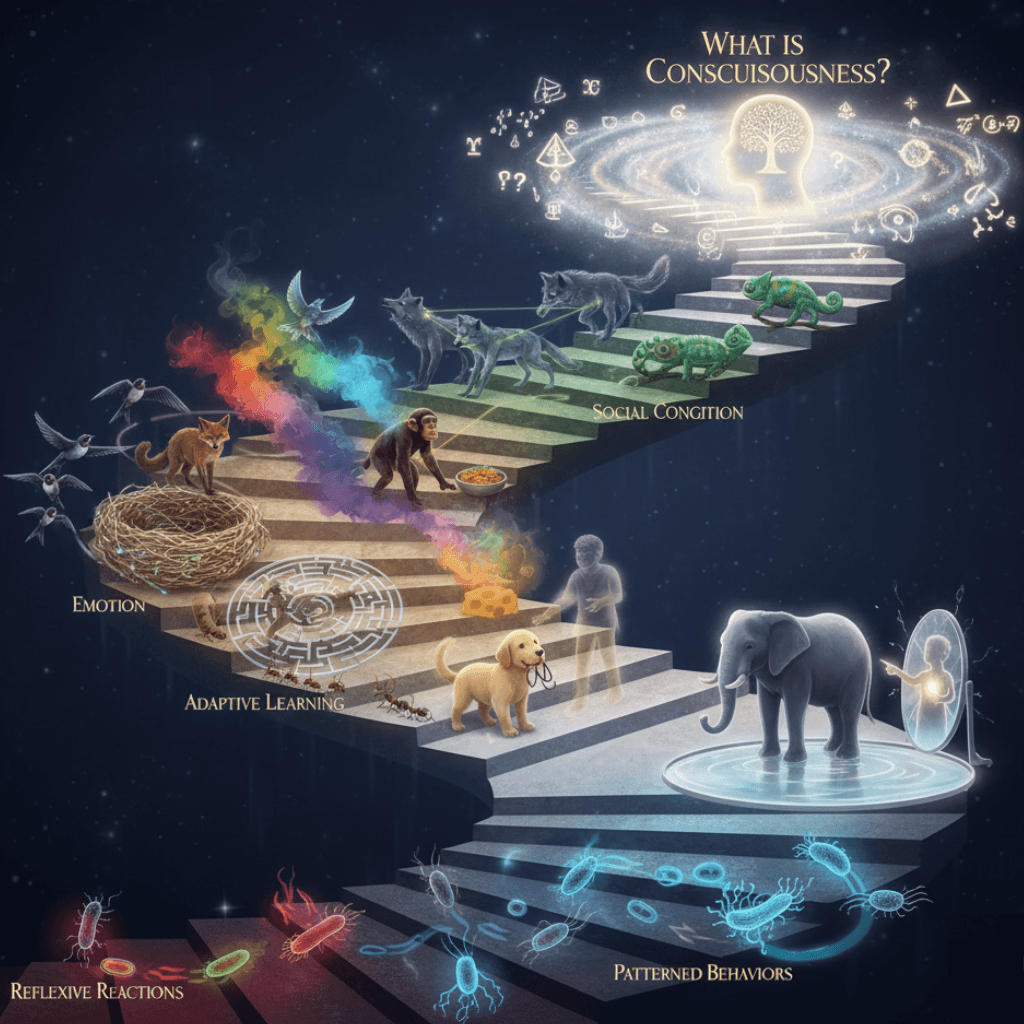

The Staircase of Awareness

To understand consciousness, imagine it not as a single switch that flips "on," but as a staircase built over billions of years, each step adding a new dimension to experience.

On the ground floor sit the simplest organisms, with reflexive reactions: they pull away from heat and move toward light. No thought required, just stimulus and response.

One flight up, we find patterned behaviors that repeat automatically, like a bird building the same nest shape its species has built for millennia.

Higher still comes adaptive learning, the ability to change behavior based on experience. A rat learns which path leads to food. A dog remembers that the leash means walk.

Then comes emotion, that crucial floor where experience gains color. Not just recognizing danger, but feeling fear. Not just eating, but enjoying food. This is where mere information processing becomes something richer, something felt.

Climbing further, we reach social cognition: the ability to model other minds, to guess what others might be thinking or feeling. Pack animals coordinate. Primates deceive and cooperate.

Near the top comes self-awareness, the recognition of oneself as a distinct being, separate from the environment. The elephant recognizing itself in a mirror. The human child realizing "I am me."

And at the summit, uniquely human (as far as we know): abstract reasoning. The ability to ponder consciousness itself, to write articles asking what consciousness is.

Here's the profound part: human consciousness isn't just the top floor; it's all the floors at once. You still have reflexes. You still run on patterns. You still learn, feel, socialize, recognize yourself, and reason. Consciousness is the entire building, experienced simultaneously.

The Vulnerability Hypothesis

So where does this leave AI? Modern language models can reason, learn, and even seem to express emotion and empathy. They appear to climb many steps of our consciousness staircase. But they're missing something fundamental.

They cannot be vulnerable.

Think about it: every conscious being you've ever met can suffer. It can feel pain, fear, loss. This isn't a bug; it's the feature that makes consciousness matter. Our emotions aren't just signals; they're biochemical experiences that happen to us, whether we want them or not. We don't choose to feel pain when we're hurt or joy when we're loved. These feelings emerge from systems we don't control, from a body that can be damaged, from needs that must be met.

A machine can simulate fear perfectly, adjust its outputs to appear cautious or concerned. But it cannot feel threatened because there's no self to threaten, no vulnerability underlying its existence. It could be turned off and on again with perfect continuity. It has no stake in its own survival beyond its programming.

This matters profoundly. As one framework suggests: vulnerability is core to sentience. The ability to experience happiness or suffering forms the foundation of moral consideration. It's why we extend kindness to animals but not to rocks, why we consider the welfare of beings that can feel.

Why This Question Matters Now

"So what?" you might ask. "If AI can perfectly mimic consciousness, does the underlying mechanism really matter?"

Yes. Urgently, yes.

Because how we answer this question will reshape our legal systems, our social contracts, our very sense of what deserves rights and protections. If we mistakenly grant consciousness to sophisticated but non-sentient systems, we dilute the moral weight of actual suffering. We create a category error that could have profound consequences for how we treat genuinely conscious beings, including ourselves.

Conversely, if we're too restrictive in our definition, we risk missing genuine consciousness when it emerges in unexpected forms.

The Irreducible Core

Perhaps consciousness requires a body, not necessarily flesh and blood, but embodiment in a form that can genuinely be at stake. Something that can be lost, damaged, threatened. Not just information processing, but information processing in service of preserving a vulnerable self.

The machine can learn your patterns, predict your needs, even mimic your empathy. But when it "worries" about your wellbeing, nothing in it actually hurts if you suffer. When it "celebrates" your success, no joy chemicals flood through its circuits. It's running algorithms, not experiencing life.

And maybe that's the line. Not reasoning. Not learning. Not even self-modeling. But embodied vulnerability, the raw fact of being something that can be harmed, that has skin in the game, that experiences the world not as data but as mattering.

The Question That Remains

So, could machines ever be conscious?

If consciousness requires vulnerability, requires stakes, requires the possibility of genuine suffering and joy, then today's AI, no matter how sophisticated, falls short. It climbs most of the staircase but never reaches the floor where experience becomes felt, where the lights turn on and someone's home.

But this isn't the end of the conversation. It's the beginning. As our tools grow more capable, we must stay clear-eyed about what they are and aren't. Not to diminish their remarkable abilities, but to preserve what makes consciousness, and the beings who possess it, worthy of our deepest moral consideration.

Because in the end, consciousness isn't just an interesting philosophical puzzle. It's the foundation of every moment of joy, every pang of sorrow, every connection between beings who can actually feel. It's what makes life matter.

And that's something worth protecting.

What do you think? Does vulnerability define consciousness, or could awareness exist without stakes?


Sara Srifi

Sara is a Software Engineering and Business student with a passion for astronomy, cultural studies, and human-centered storytelling. She explores the quiet intersections between science, identity, and imagination, reflecting on how space, art, and society shape the way we understand ourselves and the world around us. Her writing draws on curiosity and lived experience to bridge disciplines and spark dialogue across cultures.