The Machiavellian Principles Applied In An AI Hallucination Time (Part 3)

Tue Feb 03 2026

Explore how AI hallucinations and human lies converge, and why Machiavellian strategy is essential for surviving manipulation and epistemic collapse.

AI Hallucinations and Human Lies: Surviving the Epistemic Crisis

Part 3 of 5: The Machiavellian Principles Applied In An AI Hallucination Time

This is the third article in a five-part series exploring Niccolò Machiavelli's The Prince and its profound relevance to contemporary struggles against manipulation, illogic, and the erosion of epistemic certainty in the age of artificial intelligence.

The Nature of AI Hallucination

When we speak of "AI hallucination," we describe a phenomenon where large language models generate plausible-sounding information that has no basis in reality. GPT-4 might cite a legal case that never existed. Claude might reference a scientific study that was never published. Gemini could fabricate historical events with perfect grammatical confidence.

These are not "lies" in the intentional sense (the AI has no intent), but they are fabrications nonetheless.

The parallel to the human manipulator is profound.

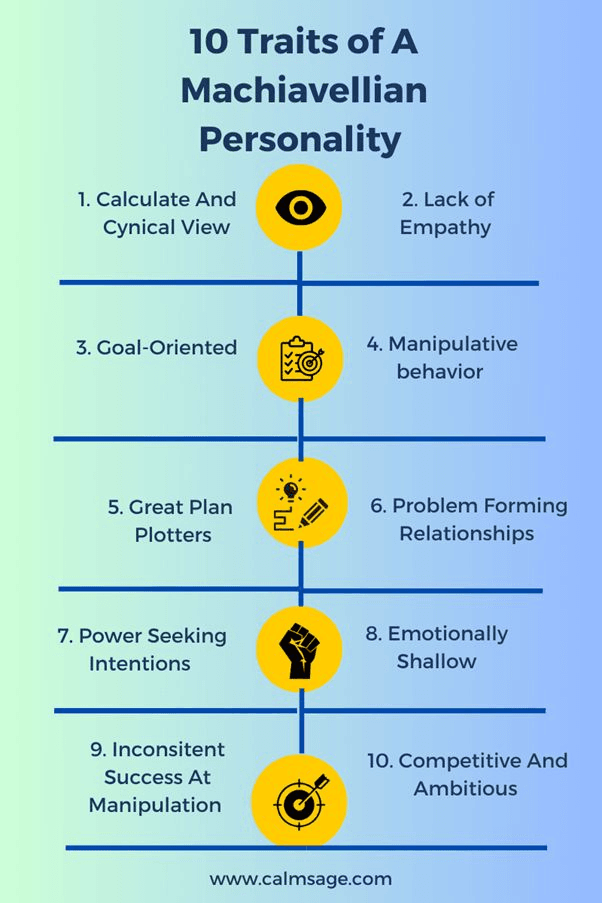

| AI Hallucination | Human Manipulation |
| --- | --- |
| Generates plausible falsehoods | Crafts convincing lies |
| No internal truth verification | No commitment to honesty |
| Confident regardless of accuracy | Delivers lies with conviction |
| Pattern-matches rather than fact-checks | Plays to audience expectations rather than reality |
| Requires human verification | Requires external fact-checking |

In both cases, the burden of verification shifts to the recipient. You cannot trust the output simply because it sounds authoritative.

The Epistemology of Confidence

Here lies perhaps the most dangerous parallel: Both AI hallucinations and human lies are often delivered with maximum confidence.

Consider the 2023 Mata v. Avianca incident, in which a lawyer used ChatGPT to research case law for a federal court filing. The AI confidently cited six cases supporting the legal argument. All six were fabrications, complete with case names, dates, judges, and detailed holdings. The lawyer, trusting the confident presentation, submitted the brief. Opposing counsel checked the citations. None existed.

The judge sanctioned the lawyer. But the deeper lesson concerns epistemic calibration: Confidence is not evidence of accuracy.
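The gap between confidence and accuracy can be measured rather than merely asserted. The sketch below is a minimal illustration in Python: the function name `calibration_gap` and the confidence value of 0.99 are assumptions made for the example (ChatGPT attached no numeric probabilities to its citations), not data from the incident.

```python
from statistics import mean


def calibration_gap(claims):
    """Return (mean stated confidence, observed accuracy, gap).

    `claims` is a list of (confidence, was_correct) pairs, where
    confidence is a probability in [0, 1] and was_correct is a bool.
    """
    avg_confidence = mean(conf for conf, _ in claims)
    accuracy = mean(1.0 if ok else 0.0 for _, ok in claims)
    return avg_confidence, accuracy, avg_confidence - accuracy


# Hypothetical illustration: six citations asserted with near-total
# confidence, none of which could be verified.
fabricated_citations = [(0.99, False)] * 6
avg_conf, acc, gap = calibration_gap(fabricated_citations)
print(f"stated confidence {avg_conf:.2f}, accuracy {acc:.2f}, gap {gap:.2f}")
# stated confidence 0.99, accuracy 0.00, gap 0.99
```

A well-calibrated source has a gap near zero. A large positive gap is the signature of the confident fabricator, human or machine.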

The human liar understands this intuitively. They deliver falsehoods with unwavering certainty, knowing that hesitation breeds suspicion. "I absolutely, categorically deny..." "The facts clearly show..." "As everyone knows..."

The more outrageous the lie, often the more confident the delivery must be to overcome cognitive dissonance in the listener.

Machiavelli in the Age of AI: Synthetic Reality

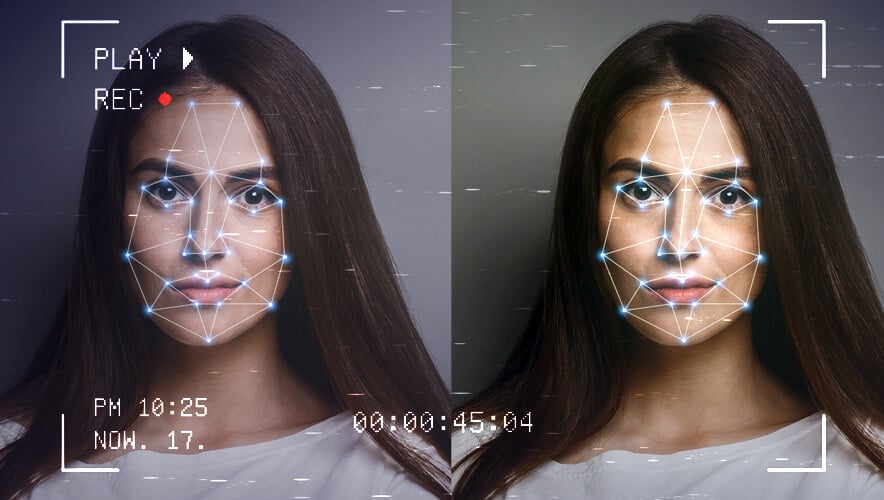

Machiavelli wrote in an age of human-only deception. What would he make of our era where deepfakes can fabricate video evidence, AI can generate thousands of fake social media accounts, synthetic voices can impersonate anyone, algorithmic amplification gives lies viral reach, and the sheer volume of information exceeds human verification capacity?

His principles translate with eerie precision.

1. The Fox Must Evolve

The fox recognises traps. In our time, the traps are digital. Verify sources through multiple channels. Check timestamps and metadata. Understand the economics of who benefits from specific narratives. Develop technical literacy in AI capabilities and limitations. Build networks of trusted verifiers.

2. The Lion Must Adapt

The lion's strength was once physical or military. Today, it manifests as legal mastery of defamation law, evidence standards, and discovery rules. It requires technical understanding of how to prove AI manipulation and authenticate genuine content. It demands institutional relationships with platforms, fact-checkers, and regulatory bodies. It builds reputational track records of accuracy that AI fabrications cannot match.

3. Reputation Becomes Cryptographic

In an age where anything can be faked, verifiable identity and cryptographic proof become the new markers of legitimacy. Digital signatures, blockchain-timestamped documents, verified accounts—these are the modern equivalent of the prince's seal and signet ring.

The Epistemic Crisis and Machiavellian Realism

We face what philosophers call an epistemic crisis, a breakdown in our collective ability to determine truth. When lies are cheap to produce, expensive to debunk, and algorithmically amplified, democratic deliberation itself becomes compromised.

Machiavelli would recognise this environment immediately. Renaissance Italy was also an epistemic wilderness: competing city-states, rival papal factions, mercenary armies switching sides mid-battle, and diplomatic treaties signed in bad faith. Truth was whatever power could enforce.

His answer was not to retreat into idealism but to develop strategic competence within chaos:

"Where the willingness is great, the difficulties cannot be great." (Chapter XXVI)

In practical terms: build verification systems into your workflow, create redundancy in your documentation, establish reputation through consistent accuracy, make yourself a more reliable source than AI or human fabricators, and invest in relationships with gatekeepers who value truth.

Digital Contexts: The Threat Evolution

Social media manipulators who deploy AI-generated content, deepfakes, or coordinated disinformation campaigns represent the contemporary frontier of Machiavellian conflict.

Modern digital manipulation includes deepfake videos showing public figures saying things they never said, AI-generated "news" articles from fabricated sources, synthetic social media accounts creating false consensus, and micro-targeted disinformation exploiting individual vulnerabilities.

The Fox's Digital Literacy

Verify through multiple independent sources. Check image metadata and run reverse image searches. Understand AI capabilities and common failure patterns. Follow the money: who benefits from this narrative? Maintain networks of trusted verifiers.
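The word "independent" carries most of the weight in that first rule. As a rough sketch (the record fields `outlet`, `origin`, and `confirms` are hypothetical names chosen for this example), corroboration can be counted by distinct origins rather than by the number of outlets repeating a claim:

```python
def count_independent_origins(reports):
    """Count distinct original sources among reports that confirm a claim.

    Each report is a dict with hypothetical keys:
      'outlet'   - who published the report
      'origin'   - where that outlet got its information
      'confirms' - True if the report supports the claim
    """
    return len({r["origin"] for r in reports if r["confirms"]})


def is_corroborated(reports, min_independent=2):
    """A claim counts as corroborated only with enough distinct origins."""
    return count_independent_origins(reports) >= min_independent


# Three outlets repeating one anonymous post are still one source.
reports = [
    {"outlet": "Site A", "origin": "anonymous post", "confirms": True},
    {"outlet": "Site B", "origin": "anonymous post", "confirms": True},
    {"outlet": "Site C", "origin": "anonymous post", "confirms": True},
]
print(is_corroborated(reports))  # False
```

The threshold of two is arbitrary. The point is that volume of repetition is not evidence, and tracing each report back to its origin is the actual work.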

The Lion's Digital Infrastructure

Build verified digital identity through blue checks and verified accounts. Use cryptographic signing for important communications. Create timestamped records of actual positions. Establish authoritative platforms under your control. Cultivate relationships with platform trust and safety teams.
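A timestamped record of actual positions is only useful if later tampering is detectable. The following is a minimal sketch of one way to do that with a hash chain, using only Python's standard library. The function names and the sample statements are assumptions for illustration. A chain like this proves internal consistency only: proving when it was written, or by whom, would additionally require publishing the latest hash with a third party or signing it with a private key.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash, timestamp, statement):
    """Hash an entry together with the hash of the entry before it."""
    payload = json.dumps(
        {"prev": prev_hash, "time": timestamp, "statement": statement},
        sort_keys=True,
    ).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log, timestamp, statement):
    """Append a statement, linking it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({
        "time": timestamp,
        "statement": statement,
        "prev": prev_hash,
        "hash": entry_hash(prev_hash, timestamp, statement),
    })


def verify_log(log):
    """Return True if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        expected = entry_hash(prev_hash, entry["time"], entry["statement"])
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, "2026-02-03T09:00:00Z", "Our position on policy X is A.")
append_entry(log, "2026-02-04T09:00:00Z", "We did not make statement Y.")
print(verify_log(log))  # True

log[0]["statement"] = "Our position on policy X is B."  # simulated tampering
print(verify_log(log))  # False
```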

Preemptive Defence: Building Dikes

Establish your reliable track record before an attack comes. Create a comprehensive digital footprint of authentic content. Build a coalition of validators who can vouch for you. Document your actual positions comprehensively.

Aggressive Response to Fabrication

Deploy DMCA takedowns where your copyrighted material is misused, and platform impersonation reports against fake accounts. Pursue defamation suits where applicable. Engage platform reporting and appeals. Publicly expose fabricators when legally safe. Make criminal referrals for serious cases.

The Challenge: Scale and Speed

Digital platforms operate at scale: a single deepfake can reach millions before being debunked. Prevention therefore matters more than reaction. Build reputation infrastructure before an attack. Respond rapidly to limit initial damage. Maintain direct channels to trust and safety teams. Use legal deterrence, through aggressive litigation, to make fabrication expensive.

QAnon: A Case Study in Epistemic Warfare

QAnon represents a fascinating case study in how AI-era information dynamics combine with human stupidity and manipulation to create epistemic chaos.

The Phenomenon

Beginning in 2017, an anonymous poster on 4chan claimed to be a high-level government insider ("Q") revealing a secret war against a cabal of Satan-worshippers. The claims were completely fabricated, internally contradictory, repeatedly falsified by events, yet believed by millions.

The Cipolla Analysis

QAnon followers engaged in Cipolla-classified "stupid" behaviour, harming themselves through social isolation, legal consequences, and financial losses, whilst harming others through divided families, incited violence, and undermined democratic processes.

The AI Amplification

While QAnon predated sophisticated AI, it demonstrated mechanisms that AI now amplifies. Pattern-matching over truth: believers found "connections" between unrelated events, similar to how LLMs generate plausible-but-false connections. Confidence regardless of accuracy: "Q drops" were delivered with certainty, never acknowledging prediction failures. Virality over verification: social media algorithms amplified emotionally resonant content regardless of truth value.

The Machiavellian Response That Worked

Those who successfully countered QAnon in their own circles applied these principles:

- Don't Argue With the Conspiracy. Direct confrontation was like arguing with a flood: it strengthened belief by feeding the persecution narrative.

- Build Alternative Information Infrastructure. Create trusted sources for family members before they encounter QAnon.

- Document the Pattern of Failure. Track every failed prediction. Over time, even committed believers struggle to ignore 100% prediction failure rates.

- Remove Platforms (The Lion's Force). Social media companies eventually deplatformed major QAnon accounts, destroying the distribution mechanism.

- Legal Consequences. Prosecuting QAnon-inspired violence, such as Capitol riot participants, created real costs for adherence.
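Documenting the pattern of failure, as described in the list above, amounts to keeping a ledger of dated predictions and their outcomes. A minimal sketch follows. The `Prediction` class, its fields, and the placeholder entries are hypothetical and stand in for real claims, which would need to be recorded verbatim with their sources.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Prediction:
    claim: str
    deadline: str                      # ISO date by which the claim should come true
    came_true: Optional[bool] = None   # None means not yet resolved


def failure_rate(predictions):
    """Share of resolved predictions that failed, or None if none are resolved."""
    resolved = [p for p in predictions if p.came_true is not None]
    if not resolved:
        return None
    failures = sum(1 for p in resolved if not p.came_true)
    return failures / len(resolved)


# Placeholder entries for illustration only.
ledger = [
    Prediction("placeholder prediction 1", "2018-01-01", came_true=False),
    Prediction("placeholder prediction 2", "2018-06-01", came_true=False),
    Prediction("placeholder prediction 3", "2030-01-01"),  # still open
]
rate = failure_rate(ledger)
print(f"{rate:.0%} of resolved predictions failed")
# 100% of resolved predictions failed
```

Excluding unresolved predictions keeps the ledger honest in both directions: open claims are neither counted as failures nor quietly forgotten.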

The Partial Success

QAnon's influence has waned but not disappeared. Why? Because the response was incomplete: the movement was wounded but not crushed. Some platforms remain. Some influencers rebranded. The underlying epistemic vulnerabilities persist.

The Lesson: In the AI age, bad information spreads faster than corrections. Prevention through trusted relationships and platform control beats post-hoc debunking.

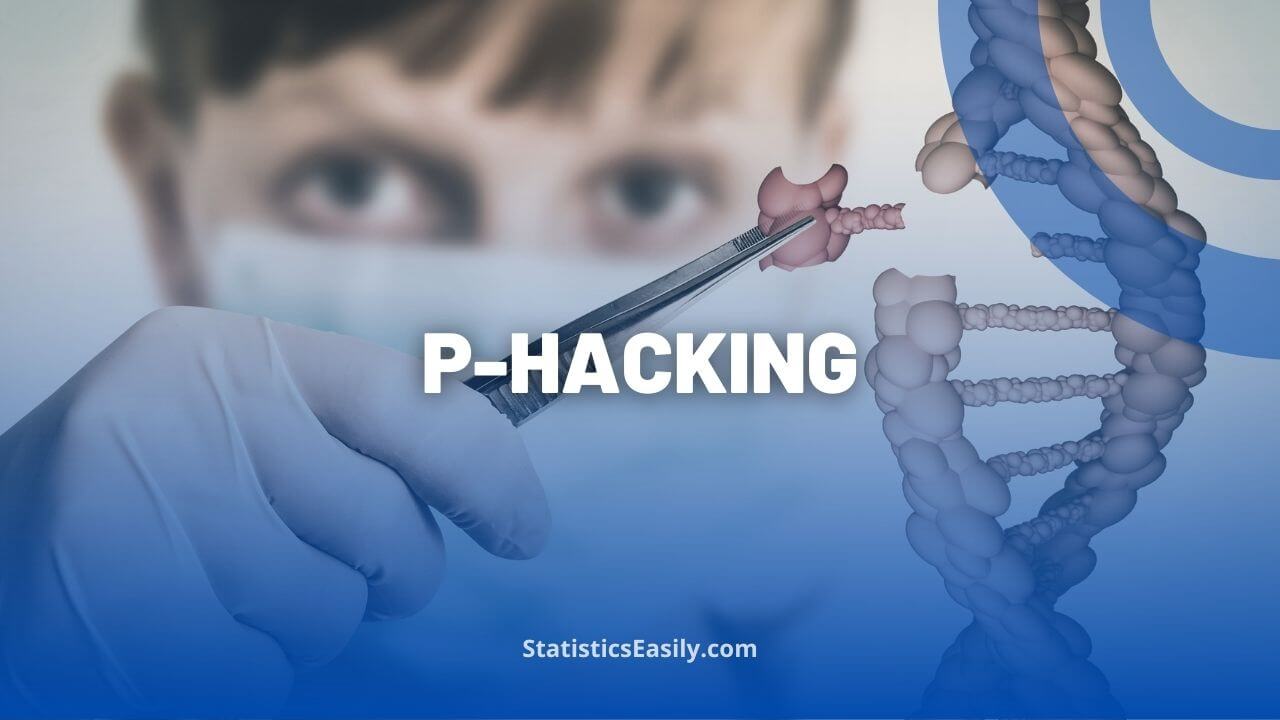

The Replication Crisis: Institutional Machiavellian Reform

The "replication crisis" in psychology and other social sciences demonstrates how institutional structures can apply Machiavellian principles to combat systemic dishonesty.

The Problem

For decades, researchers p-hacked data to find publishable results, failed to report negative findings, used questionable research practices, and made claims unsupported by their data. This wasn't necessarily conscious fraud; often it was motivated reasoning combined with publication pressure. But the result was a literature filled with unreplicable findings.
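The arithmetic behind p-hacking is simple enough to work through. Assuming independent tests of effects that do not exist, each run at the conventional 5% significance threshold, the chance of at least one spurious "significant" result is 1 - (1 - 0.05)^k for k tests. The function name below is illustrative, and real analyses are rarely fully independent, so treat this as a sketch of the mechanism rather than a model of any particular study.

```python
def chance_of_false_positive(num_tests, alpha=0.05):
    """Probability that at least one of `num_tests` independent tests of
    true null hypotheses comes out 'significant' at level `alpha`."""
    return 1 - (1 - alpha) ** num_tests


for k in (1, 5, 20):
    print(k, round(chance_of_false_positive(k), 2))
# 1 0.05
# 5 0.23
# 20 0.64
```

A researcher who tries twenty analyses and reports only the one that "worked" has roughly a two-in-three chance of publishing noise, without ever fabricating a data point.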

The Cipolla Classification

Many of these practices were “stupid”: they harmed the researchers, who built careers on false foundations, and they harmed science, which was left with an unreliable knowledge base.

The Machiavellian Reform

Starting around 2011, reformers applied strategic principles:

The Fox Identifies the Trap. Brian Nosek and others systematically attempted to replicate famous studies. Failure rates exceeded 50%.

Documentation as Weapon. Pre-registration of studies, documenting hypotheses and methods before data collection, makes p-hacking detectable.

Institutional Force (The Lion). Major journals now require data sharing, pre-registration, replication studies, and transparency reports.

Reputation Reconstruction. Researchers who embrace open science build credibility; those who resist face increasing scepticism.

Destroying the Old System. The aim was not to reform questionable practices but to make them impossible, through technical solutions like pre-registration platforms, policy changes in journal requirements, and cultural shifts valuing replication.
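The logic of pre-registration can be illustrated with a commit-then-reveal scheme: publish a fingerprint of the analysis plan before collecting data, then reveal the plan later so anyone can check it was not changed. This sketch is an analogy built on Python's standard library, not a description of how any actual pre-registration platform works. The function names and the sample plan text are assumptions for the example.

```python
import hashlib
import hmac
import secrets


def commit(plan_text, nonce):
    """Return a SHA-256 fingerprint of the plan, salted with a random nonce
    so that short plans cannot be guessed from the digest alone."""
    return hashlib.sha256(nonce + plan_text.encode("utf-8")).hexdigest()


def matches_commitment(plan_text, nonce, published_digest):
    """Check a revealed plan and nonce against the digest published earlier."""
    return hmac.compare_digest(commit(plan_text, nonce), published_digest)


nonce = secrets.token_bytes(16)
plan = "H1: treatment raises score. n=200. Primary test: two-sided t-test."
digest = commit(plan, nonce)  # published before data collection

print(matches_commitment(plan, nonce, digest))  # True
# Quietly changing the sample size after the fact no longer matches.
print(matches_commitment(plan.replace("n=200", "n=120"), nonce, digest))  # False
```

The design choice that matters is the ordering: the digest must be public before the data exist, which is what turns a private intention into a checkable record.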

The Ongoing Battle

This reform succeeded because it didn't appeal to researchers' better angels—it changed incentive structures. The fox recognised the trap; the lion enforced new rules.

The Lesson: Against systemic dishonesty, moral exhortation fails. Structural change that makes dishonesty detectable and costly succeeds.

Academic Accountability: The Lion's Institutional Force

Scholars who fabricate data, plagiarise, or make unfounded claims undermine the collective enterprise of knowledge production and face Machiavellian consequences when exposed.

Academic fraud cases demonstrate the principles in action. The fox's peer review operates through pre-publication review catching errors and fraud, post-publication replication attempts verifying findings, meta-analyses revealing patterns of questionable results, and whistleblowers reporting witnessed misconduct.

The lion's institutional force manifests through retraction of fraudulent papers, loss of funding, termination of employment, loss of professional credentials, criminal prosecution for grant fraud, and civil suits from harmed parties.

Comprehensive documentation occurs via PubPeer and similar platforms documenting problems, Retraction Watch cataloguing fraud, Office of Research Integrity investigations, and university misconduct proceedings.

Recent Examples

Francesca Gino at Harvard Business School was accused of data manipulation in behavioural science studies. Harvard placed her on administrative leave and launched an investigation, and she faced public exposure and litigation. This was not a slap on the wrist but potential career termination.

Brian Wansink at Cornell had multiple papers retracted for data problems. He resigned his professorship. His work was discredited. His career ended.

Diederik Stapel at Tilburg University fabricated data in dozens of papers. He gave up his PhD, was dismissed from his position, and became a cautionary tale.

The Lesson

Academic integrity relies on consequences, not honour codes. The system works when fraud is detected by the fox, exposed comprehensively through documentation, and punished decisively by the lion.

The AI Hallucination Era Demands Machiavellian Virtue

We live in unique times. For the first time in human history, lies can be generated at machine speed and scale, verification requires expertise most people lack, the boundary between human and synthetic content has dissolved, and trust in institutions has eroded precisely when institutional verification is most needed.

In this environment, Machiavellian principles are not optional luxuries—they are survival necessities.

- The Fox Must Verify. Every claim, every source, every confident assertion. Trust nothing on authority alone.

- The Lion Must Enforce. Consequences for dishonesty must exceed benefits. Make lying expensive.

- Reputation Must Be Earned. Through consistent accuracy over time, not through confident presentation.

- Institutions Must Protect Truth. Through structural incentives, not moral exhortation.

- Stupidity Must Be Contained. Through systemic safeguards, not reasoning with the unreasonable.

The Path Forward: Strategic Competence in Chaos

As Machiavelli himself might have observed: the tools of power change, but human nature, in its glory and its stupidity, remains constant.

Against manipulators, whether human or AI-augmented, you will face difficulties. They will lie. They will manipulate. They may temporarily succeed. But if your willingness to defend truth is great, if you combine fox-intelligence with lion-strength, if you manage appearances whilst building substance, the difficulties become surmountable.

We live in what might be called “an AI hallucination time”, an epoch where the boundary between truth and fabrication has become dangerously permeable, where manipulators deploy not merely human cunning but synthetic intelligence to amplify their deceptions.

The question Machiavelli posed in 1513 remains our question in 2026: How does one who seeks truth survive in a world dominated by those who wield lies as weapons?

The answer: Develop virtù sufficient to match the challenge. Be both fox and lion. Verify everything. Build the dikes. Make truth competitive with lies.

Continue to Part 4: "The Five Machiavellian Principles for Modern Conflict"

Series Overview:

- Part 1: The Fox and The Lion

- Part 2: The Stupidity Principle

- Part 3: AI Hallucinations and Human Lies (You are here)

- Part 4: The Five Machiavellian Principles

- Part 5: Strategic Ruthlessness in Service of Truth
