Bridging the AI Divide: How to Safely Integrate AI in Schools

Hind MoutaoikiI, R&D Manager

Fri Jun 13 2025

As artificial intelligence reshapes classrooms across the world, the debate has shifted from whether to adopt the technology to how to deploy it responsibly, fairly, and with impact. With the AI-driven edtech market on track to hit $32.27 billion by 2030, the pressure to get the rollout right is strong.

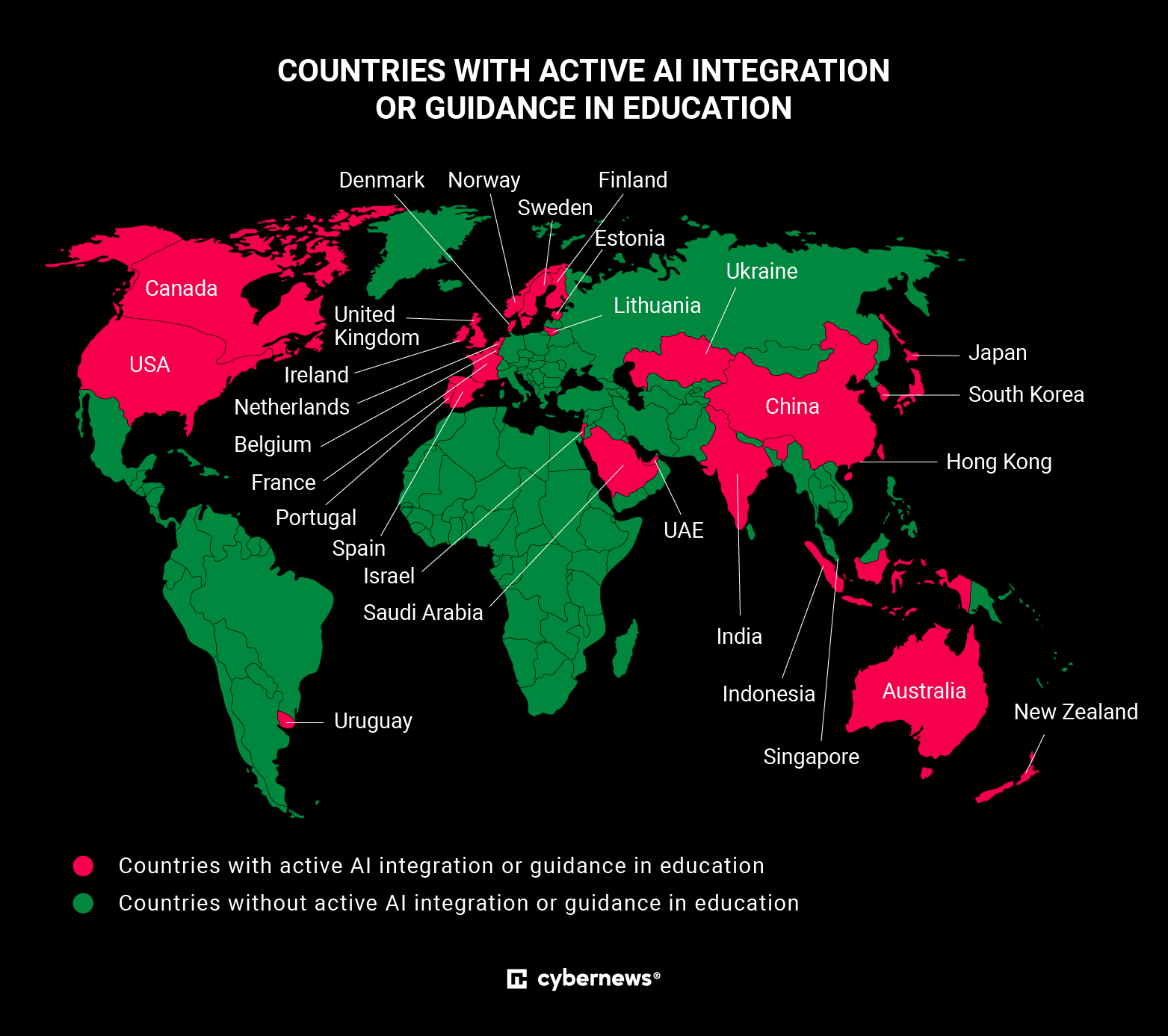

Experts caution that countries that delay introducing AI risk widening the digital skills gap. According to UNESCO, rapid progress in AI has made the digital gap even bigger, leading to what many now call the "AI divide." This can leave students less competitive internationally and exacerbate existing inequalities, particularly in regions with limited access to technology and training.

Some countries are already taking decisive steps to address these risks and bridge the digital divide. Lithuania is putting nexos.ai at the center of a major new plan. Starting this September, every secondary school in the country will receive free access to nexos.ai, an all-in-one AI platform already used by global tech companies, with the goal of helping school communities use AI tools safely and privately.

“We are proud that nexos.ai can help in making advanced AI tools both accessible and safe for everyone in the school community,” said Tomas Okmanas, the co-founder of nexos.ai. “By bringing a selection of advanced AI tools in one intuitive and secure window, we help teachers focus on teaching and students on learning. Our goal is to empower schools to innovate confidently, knowing that security and privacy is built in from the ground up.”

Why AI Integration Is Important

AI is already changing education by personalizing learning, automating administrative tasks, and giving teachers a deeper understanding of student progress.

Nearly half of school administrators now use the technology daily, according to survey data. The main reasons are cutting administrative load (42%), adapting lessons to individual needs (25%), and boosting engagement (18%). The result is more time for teaching, less time lost to routine tasks.

Around 44% of children actively engage with generative AI, with 54% using it for schoolwork and/or homework. According to high school educators, improved student engagement is among the top benefits of AI use.

The implications of successful AI integration stretch beyond education. Some 86% of employers say AI and information processing will significantly change how they operate by 2030. That’s driving a surge in demand for skills like data analysis, cybersecurity, and digital literacy.

Training, Ethics, and Access: The Real AI Homework

Successfully integrating AI into education means going far beyond installing tools – it requires building digital skills, protecting privacy, and ensuring fair access. According to Žilvinas Girenas, AI security expert at nexos.ai, this challenge is as much about people as it is about technology.

“AI in education raises real questions about ethics, safety, and equal opportunity. Without the right groundwork, we risk amplifying the very divides we hope to close. It’s not enough to hand out tools. Students and teachers must trust that these tools handle data responsibly, and that what happens in the AI system stays confidential. Security guardrails, careful handling of uploaded content, and clear use policies are now essential parts of digital literacy,” said Girenas.

He’s identified several key steps that stand out as particularly vital in Lithuania’s strategy.

1. Choose secure, privacy-compliant AI tools.

“In education, the boundary between helpful AI and risky AI is thin. AI tools must not only meet privacy standards but also guard against accidental exposure of student data. Uploading sensitive or confidential content must be shielded by guardrails – automated or manual – that make such errors nearly impossible. Without these protections, trust in AI breaks down fast, especially in schools.”

2. Invest in teacher training.

“Then it’s important to invest in professional development for teachers. We see that hands-on training and ongoing support are crucial for building AI literacy and confidence among educators and students.”

3. Integrate AI across the curriculum.

“Update the curriculum to incorporate AI concepts across subjects – not just computer science. This helps students learn how AI works in different areas, like math, science, and even history or art. When AI is part of everyday lessons, students can see how it connects to their lives and understand better how to use it safely and smartly. This way, everyone gets a chance to learn about AI, not just those who study computers.”

4. Set clear learning goals and assessment.

“Setting clear learning objectives and assessment criteria is also important. This includes defining what students should know and be able to do with AI, from critical thinking to ethical awareness.”

5. Encourage practical, creative use.

“It’s right to let students explore and create with AI, but they also need to understand the boundaries. Where does responsible use end and risk begin? Teaching this early, through real project work and case studies, prepares students not just to use AI but to question it, challenge it, and improve it. This mindset is as important as any technical skill.”

6. Evaluate and adapt for equity.

“One of the greatest risks with AI in schools is that gaps get wider, not smaller. Urban schools may advance faster than rural ones, and students with devices at home may outpace those without. Programs must include regular impact reviews to check who is left behind – and adjust resources, training, and support until no group is at a disadvantage.”

Cybersecurity at the Core

Lithuania’s nationwide AI-in-schools program puts safety first: the tools it offers are built for secure, responsible use in the classroom. This echoes what experts from global organizations have been saying, namely that successfully bringing AI into education takes more than handing out devices; it requires thoughtful integration into how students learn.

Cybersecurity experts see this as a positive step forward. Aras Nazarovas, security researcher at Cybernews, notes, “While the use of AI in schools is still a controversial topic, a more secure implementation, such as via tools like nexos.ai allows for better auditing, and control. This approach enables safer AI use by protecting student data and ensuring that AI wouldn’t be abused to handle tasks that students were meant to do themselves (homework, tests, assignments).”

Recent years have shown why this matters. Lithuania, like many countries, has seen a rise in cyber incidents: it recorded 63% more in 2024 than in 2023, partly due to a better understanding of the need to report them. In response, and in line with the EU’s NIS2 directive, Lithuania is tightening cybersecurity in its education sector. From October 2024, all public institutions, including schools, must connect via the state’s secure data network and appoint cybersecurity managers.

Regular staff training is mandatory, and institutions are required to implement risk-based technical safeguards. The Ministry of Education is also rolling out new guidelines for safe AI use in classrooms, with a focus on digital literacy and teacher upskilling.

“Lithuania’s approach shows that you can open doors to innovation without leaving security behind. With the right habits, safeguards, and ongoing attention, schools can make the most of AI – while keeping students’ data and futures protected,” concluded Nazarovas.

ABOUT CYBERNEWS

Cybernews is a cybersecurity news and research outlet. It provides reporting, analysis, and insights on digital threats and online safety for a global audience.

Hind MoutaoikiI, R&D Manager

Hind is a Data Scientist and Computer Science graduate with a passion for research, development, and interdisciplinary exploration. She publishes on diverse subjects including philosophy, fine arts, mental health, and emerging technologies. Her work bridges data-driven insights with humanistic inquiry, illuminating the evolving relationships between art, culture, science, and innovation.